With Velero now installed on our cluster, we can create a backup job and protect our data. We can also test restores of our data into a new namespace.

I mentioned in the previous post that we’re going to use File-System Backups (FSB) for the applications in our TKG cluster, and you’ll see how that’s handled here.

Velero works using the idea of “jobs”: either a backup job or a restore job. Backup jobs can be run as a one-off or triggered by a schedule.

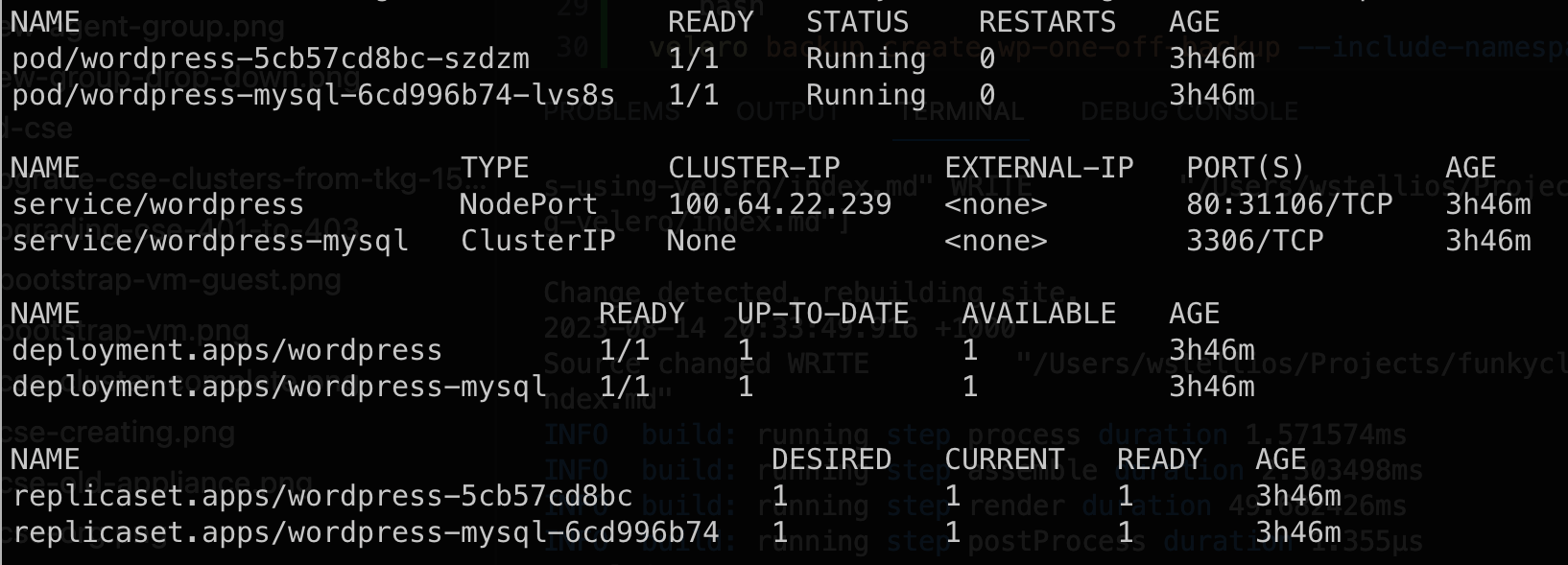

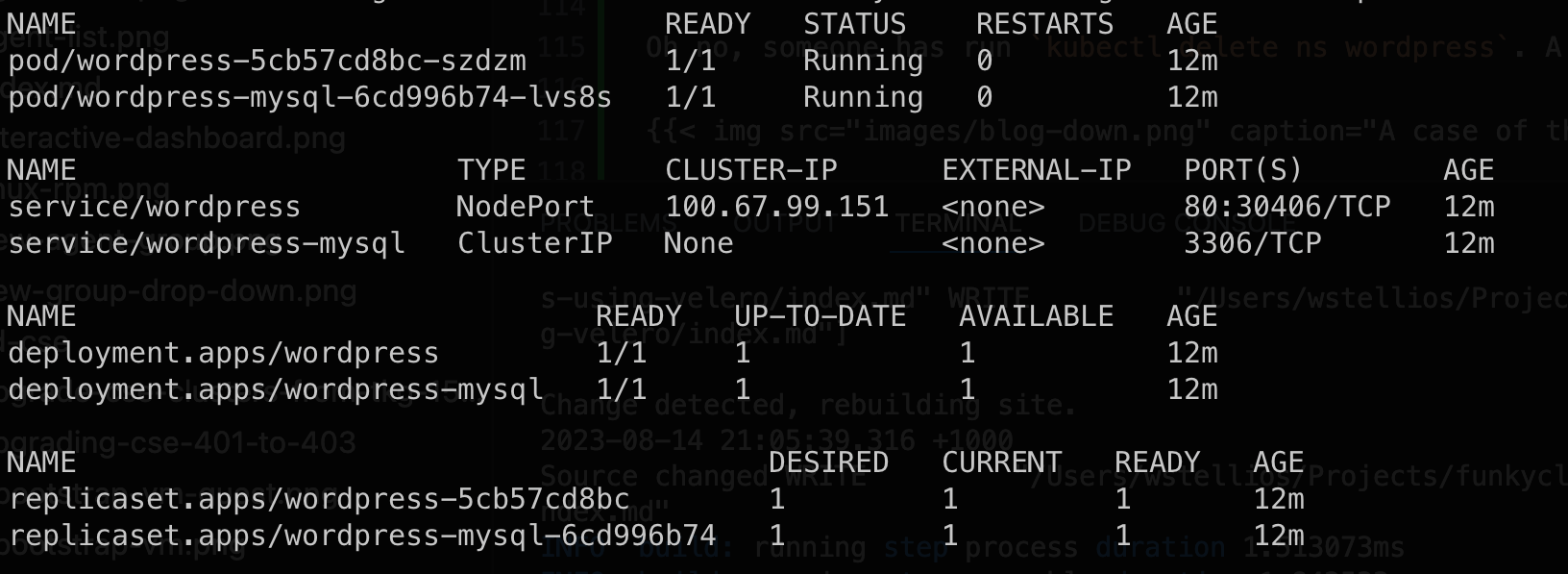

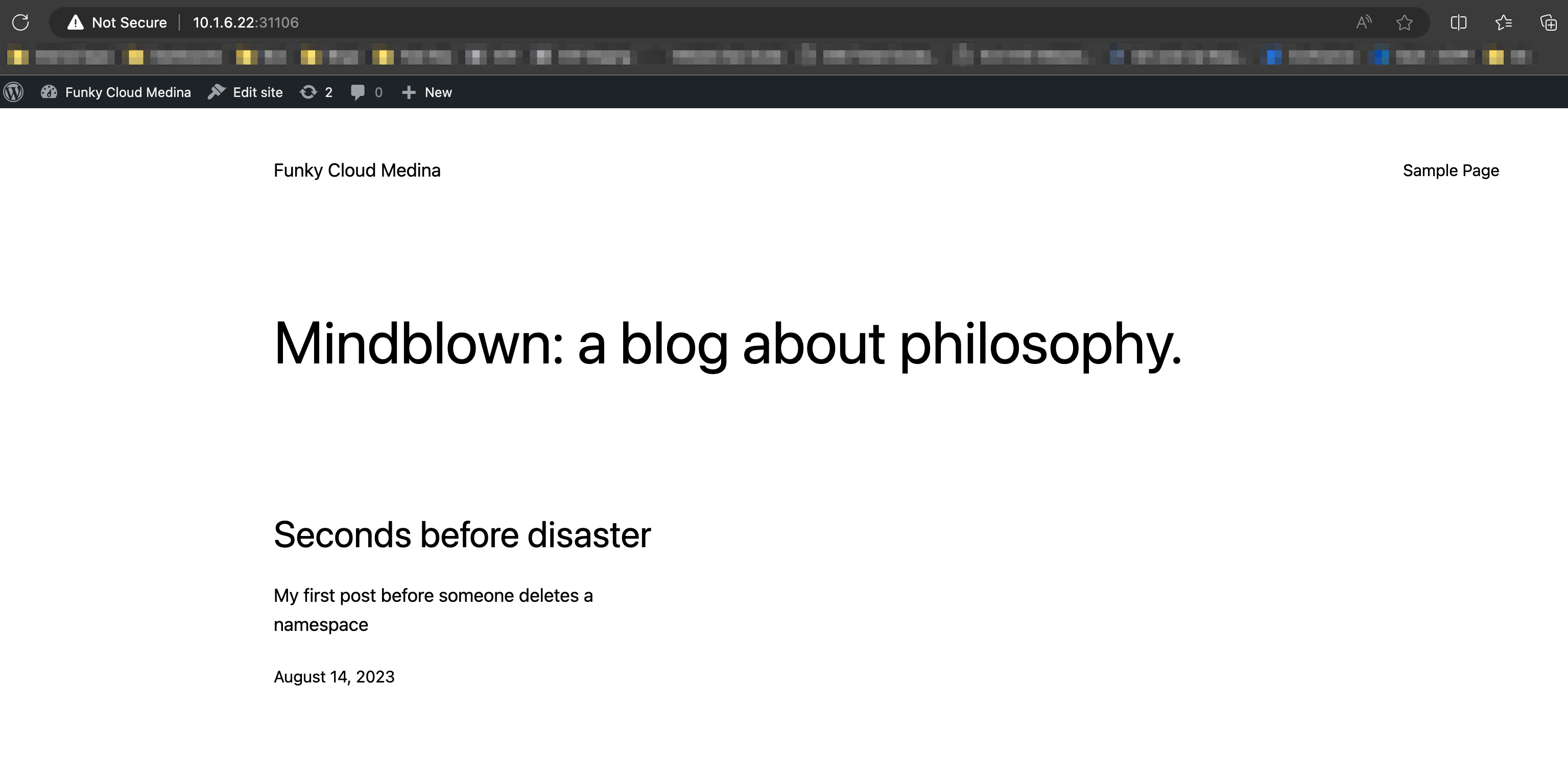

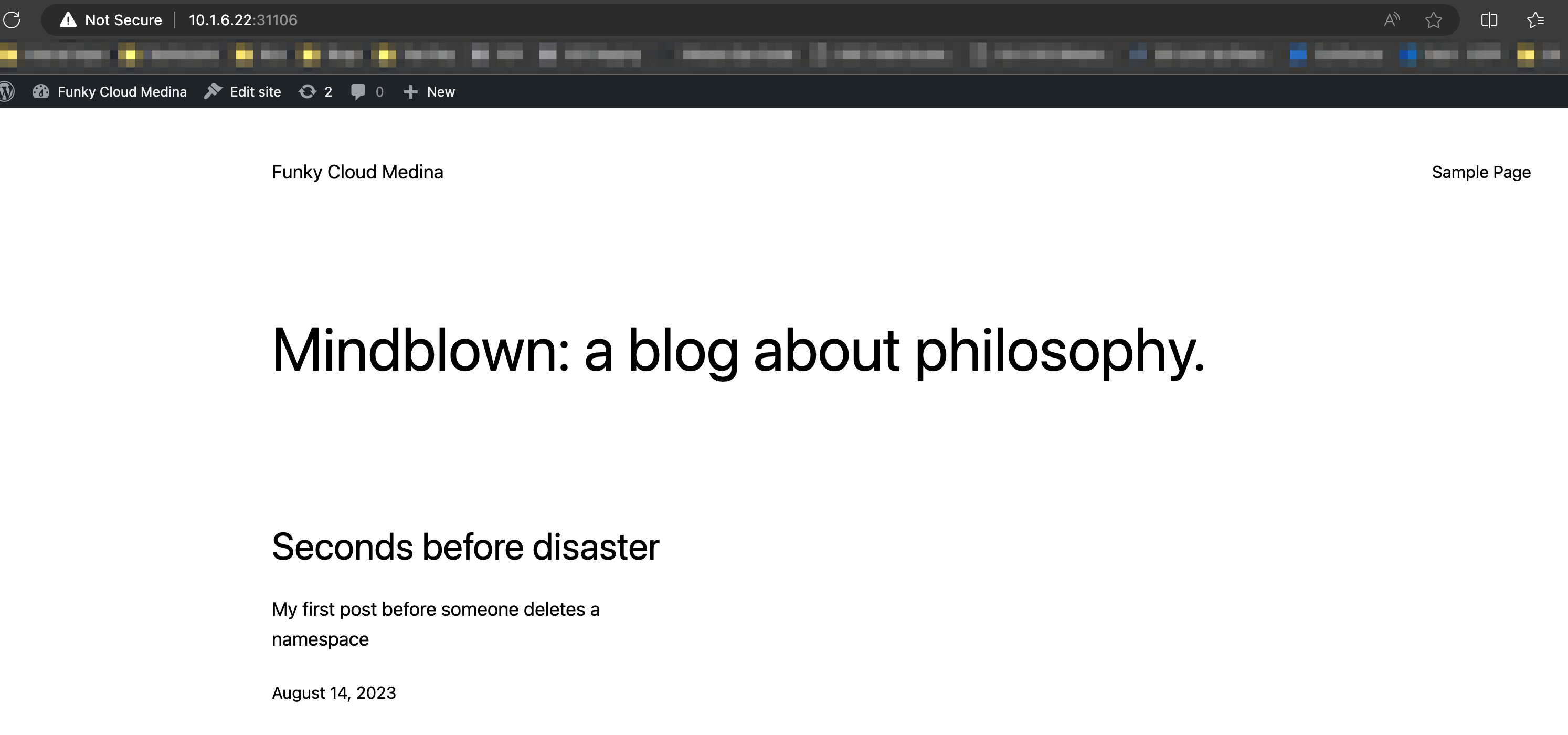

For this post, I have deployed a demo WordPress instance (yea let’s all laugh at the typical WordPress demo). WordPress is a decent test as it has persistent application and database data that we want to protect.

I’ve deployed WordPress using the basic walkthrough available here: https://kubernetes.io/docs/tutorials/stateful-application/mysql-wordpress-persistent-volume/.

Note: I don’t have a LoadBalancer configured in this cluster, so I changed the WordPress app layer Service type to NodePort so I could access it.

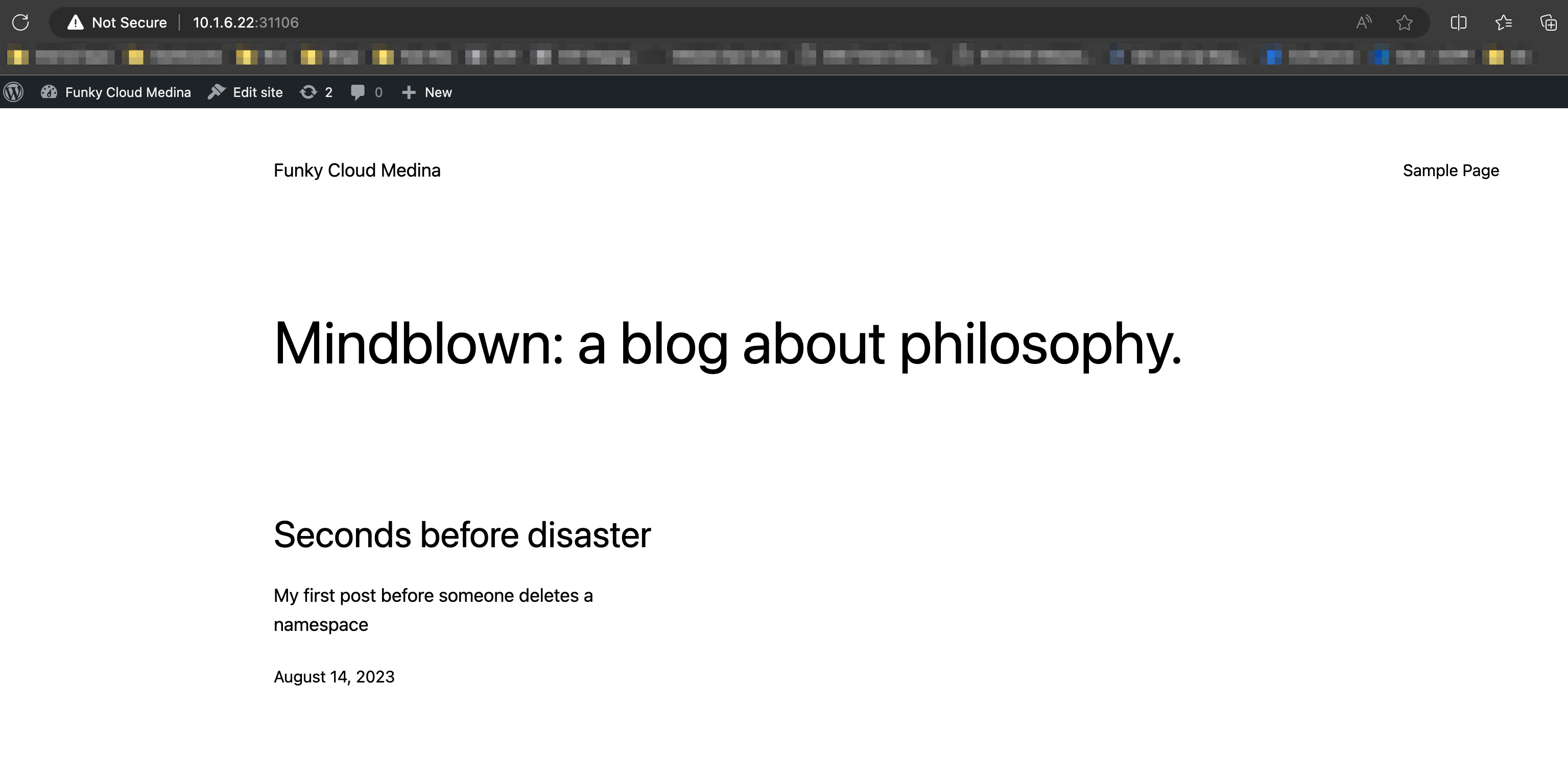

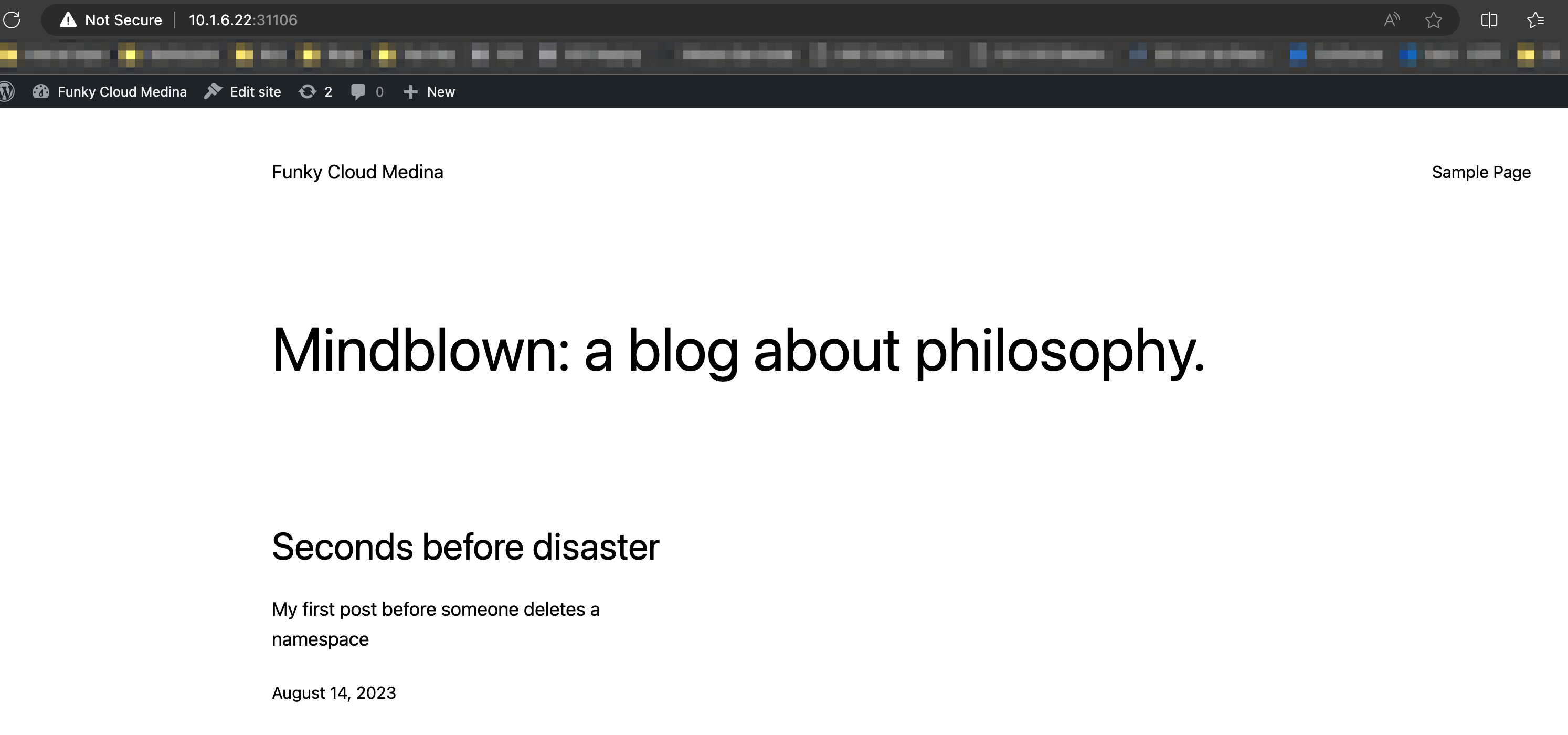

You can see I have a great first post in my victim blog platform:

Create a one-off backup job

Let’s create a one-off backup of our deployment using the Velero CLI. Remember, the CLI will use your currently active kubeconfig context to interact with the API.

    velero backup create wp-one-off-backup --include-namespaces wordpress --default-volumes-to-fs-backup

With this command we’ve requested a new backup job named wp-one-off-backup, targeting all resources in the wordpress namespace. Note that one-off backup jobs require unique names. The final flag, --default-volumes-to-fs-backup, instructs Velero to process the attached volumes (PVCs) with File-System Backup using Restic instead of attempting snapshot-based backups.

You’ll get this response:

    Backup request "wp-one-off-backup" submitted successfully.
    Run `velero backup describe wp-one-off-backup` or `velero backup logs wp-one-off-backup` for more details.
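Since one-off backup jobs need unique names, one option is to generate the name instead of typing it. This is only a minimal sketch: the helper name unique_backup_name is made up for illustration, and the timestamp suffix simply imitates the naming that Velero schedules use for the backups they create.

```shell
# Hypothetical helper: print "<prefix>-<UTC timestamp>" so that each
# one-off backup gets a name that is unique per second.
unique_backup_name() {
  printf '%s-%s\n' "$1" "$(date -u +%Y%m%d%H%M%S)"
}

# Example use (needs the velero CLI and a working kubeconfig context):
# velero backup create "$(unique_backup_name wp-one-off)" \
#   --include-namespaces wordpress --default-volumes-to-fs-backup
```

Two runs within the same second would still collide, which is unlikely to matter for backups started by hand.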

You can view all backup jobs recorded by Velero by running velero backup get:

    NAME                STATUS       ERRORS   WARNINGS   CREATED                          EXPIRES   STORAGE LOCATION   SELECTOR
    wp-one-off-backup   InProgress   0        0          2023-08-14 20:41:37 +1000 AEST   29d       default            <none>

Let’s view the details of the backup job by running velero backup describe wp-one-off-backup:

    Name: wp-one-off-backup
    Namespace: velero
    Labels: velero.io/storage-location=default
    Annotations: velero.io/source-cluster-k8s-gitversion=v1.23.8+vmware.2
                 velero.io/source-cluster-k8s-major-version=1
                 velero.io/source-cluster-k8s-minor-version=23

    Phase: Completed

    Namespaces:
      Included: wordpress
      Excluded: <none>

    Resources:
      Included: *
      Excluded: <none>
      Cluster-scoped: auto

    Label selector: <none>

    Storage Location: default

    Velero-Native Snapshot PVs: auto

    TTL: 720h0m0s

    CSISnapshotTimeout: 10m0s
    ItemOperationTimeout: 1h0m0s

    Hooks: <none>

    Backup Format Version: 1.1.0

    Started: 2023-08-14 20:41:37 +1000 AEST
    Completed: 2023-08-14 20:42:19 +1000 AEST

    Expiration: 2023-09-13 20:41:37 +1000 AEST

    Total items to be backed up: 20
    Items backed up: 20

    Velero-Native Snapshots: <none included>

    restic Backups (specify --details for more information):
      Completed: 2

Have a look at the Phase line and you’ll see the backup job has completed its run. You’ll only see this once all files have ended up at the destination bucket.
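If you would rather script the wait than re-run describe, one approach is to poll the Backup object that Velero stores in the cluster. Treat this as a sketch under assumptions: Velero is installed in the velero namespace, kubectl points at the same context as the Velero CLI, and the function name and retry defaults are invented for illustration. The CLI also offers a --wait flag on velero backup create, which blocks until the job finishes.

```shell
# Hypothetical helper: poll a Velero Backup until it reaches a terminal phase.
# Usage: wait_for_backup <backup-name> [max-polls] [seconds-between-polls]
wait_for_backup() {
  name="$1"
  tries="${2:-120}"
  interval="${3:-5}"
  phase=""
  while [ "$tries" -gt 0 ]; do
    # Backups are custom resources; the current phase lives in .status.phase.
    phase="$(kubectl get backups.velero.io "$name" -n velero -o jsonpath='{.status.phase}')"
    case "$phase" in
      Completed)
        echo "backup $name: $phase"
        return 0 ;;
      PartiallyFailed|Failed|FailedValidation)
        echo "backup $name: $phase" >&2
        return 1 ;;
      *) ;;  # still new or in progress, keep polling
    esac
    tries=$((tries - 1))
    sleep "$interval"
  done
  echo "backup $name: timed out (last phase: ${phase:-unknown})" >&2
  return 1
}

# Example: wait_for_backup wp-one-off-backup
```

The function returns non-zero on failure or timeout, so it can gate a later step in a script.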

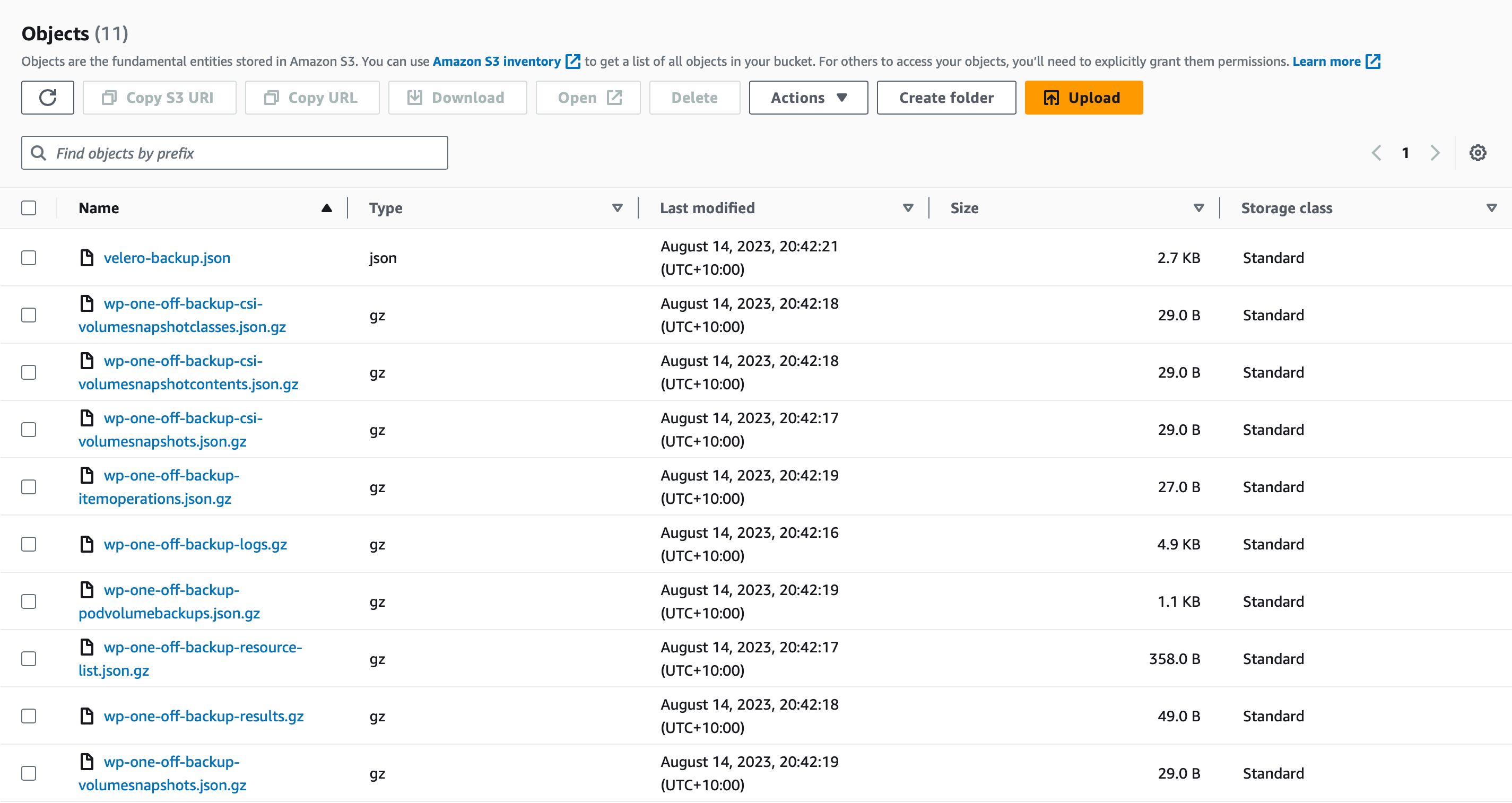

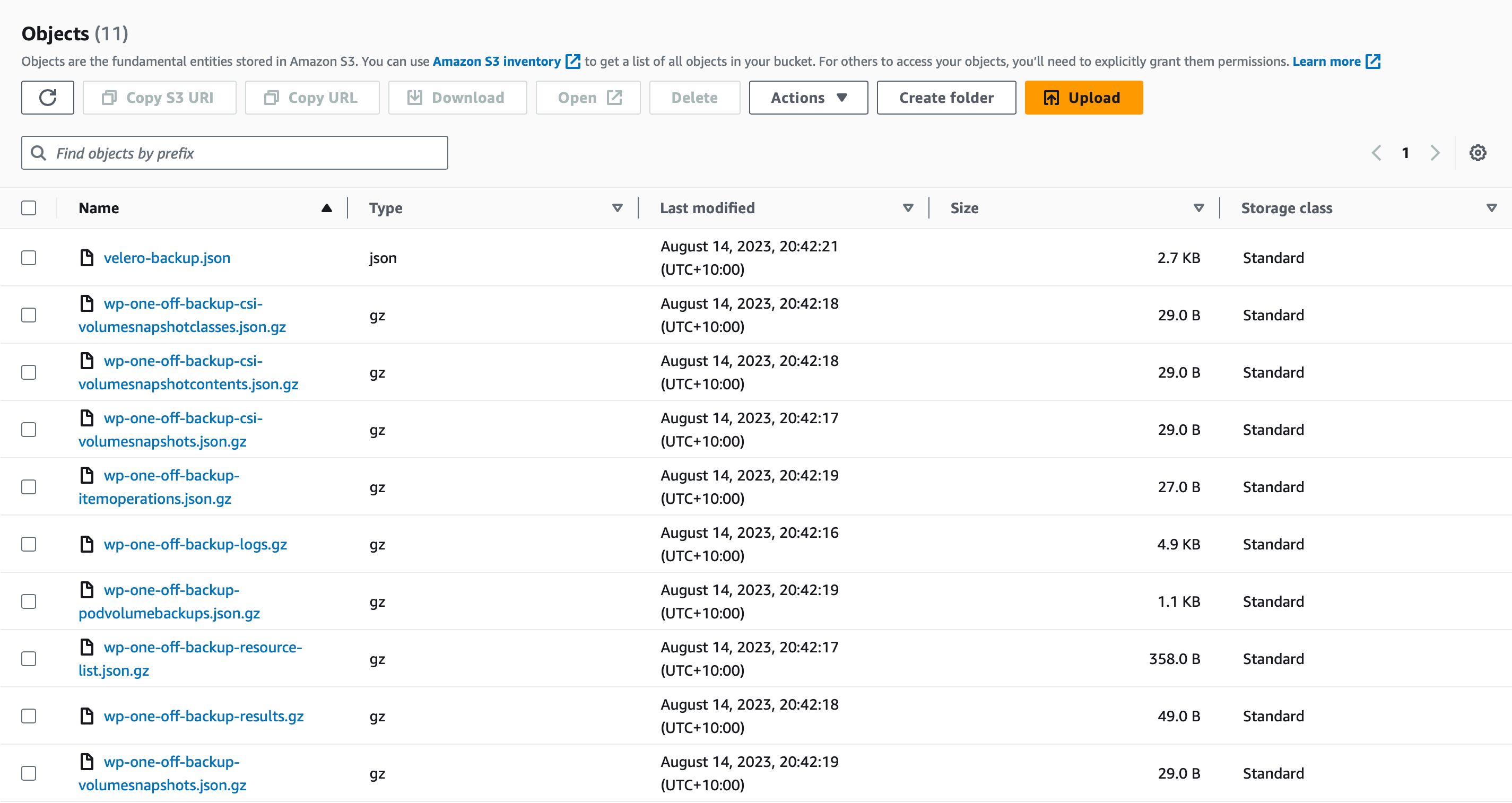

Switching over to my bucket object list in AWS S3, we can see the backup directory has been created and inside it the backup data of the Velero backup job.

That’s literally it. The contents of the PVC mounts in the container filesystem have been backed up by Restic and sent to S3 by Velero, along with a tarball describing the namespace and all objects deployed under the wordpress namespace.

When the backup job has completed, the Phase line is marked as Completed.

Create a restore job

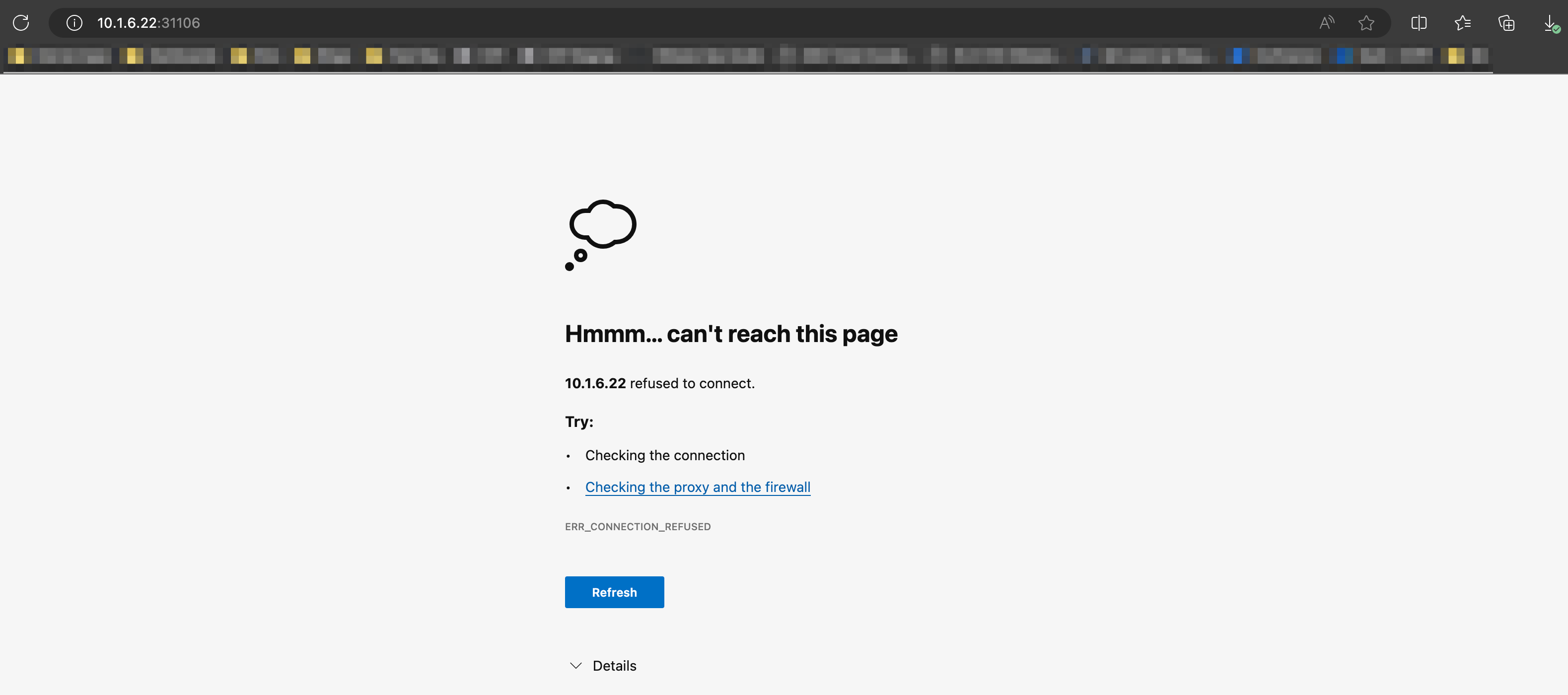

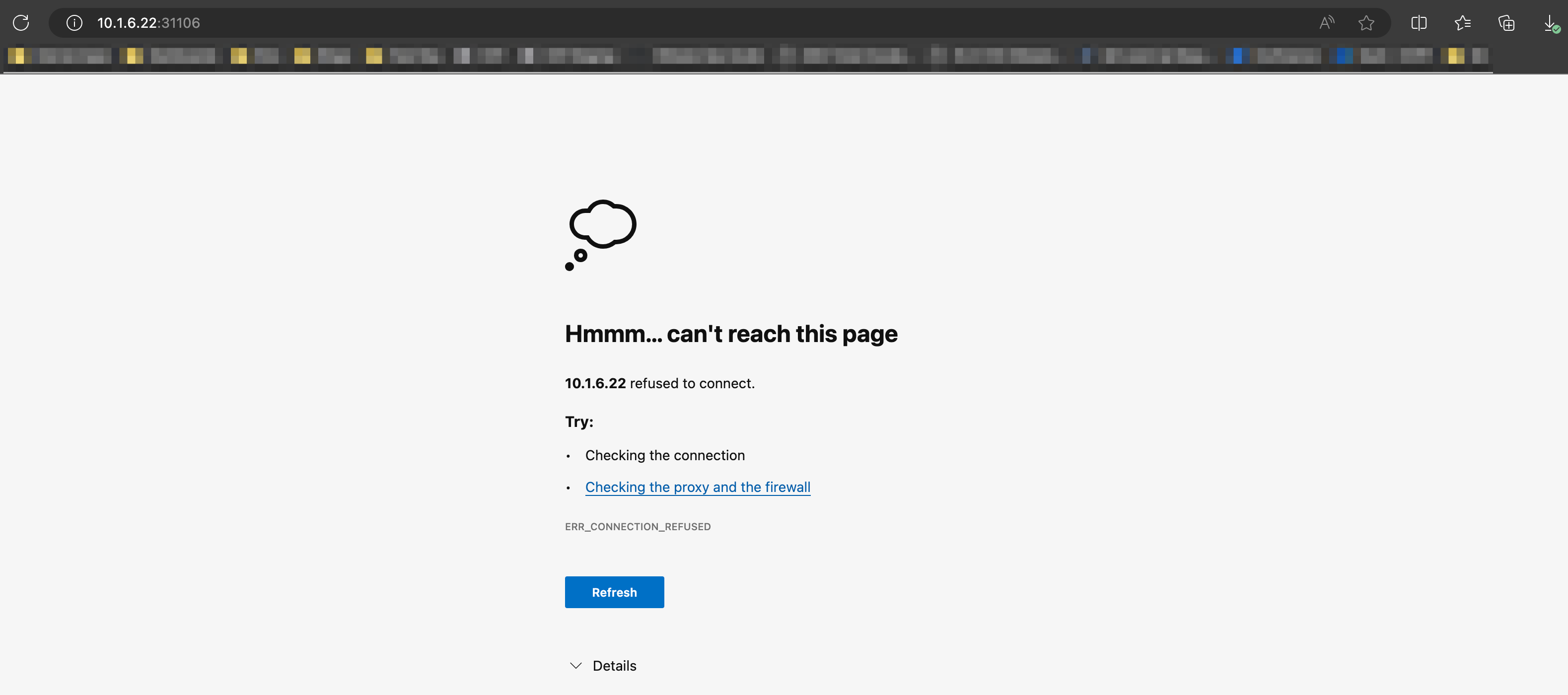

Oh no, someone has run kubectl delete ns wordpress. A classic case of pebcak.

Lucky for us we created a one-off backup just moments ago.

Into a namespace of the same name

By default, Velero will restore the application and data into the original namespace. If it doesn’t exist, it’ll recreate it.

As I mentioned earlier, everything is a ‘job’. So, we need to create a restore job.

    velero restore create wp-restore-from-pebcak --from-backup wp-one-off-backup

You’ll get this response:

    Restore request "wp-restore-from-pebcak" submitted successfully.
    Run `velero restore describe wp-restore-from-pebcak` or `velero restore logs wp-restore-from-pebcak` for more details.

Let’s check the status of the restore with:

    velero restore describe wp-restore-from-pebcak --details

    Name: wp-restore-from-pebcak
    Namespace: velero
    Labels: <none>
    Annotations: <none>

    Phase: Completed
    Total items to be restored: 20
    Items restored: 20

    Started: 2023-08-14 20:54:20 +1000 AEST
    Completed: 2023-08-14 20:55:31 +1000 AEST

    Warnings:
      Velero: <none>
      Cluster: <none>
      Namespaces:
        wordpress: could not restore, ConfigMap "kube-root-ca.crt" already exists. Warning: the in-cluster version is different than the backed-up version.

    Backup: wp-one-off-backup

    Namespaces:
      Included: all namespaces found in the backup
      Excluded: <none>

    Resources:
      Included: *
      Excluded: nodes, events, events.events.k8s.io, backups.velero.io, restores.velero.io, resticrepositories.velero.io, csinodes.storage.k8s.io, volumeattachments.storage.k8s.io, backuprepositories.velero.io
      Cluster-scoped: auto

    Namespace mappings: <none>

    Label selector: <none>

    Restore PVs: auto

    restic Restores:
      Completed:
        wordpress/wordpress-5cb57cd8bc-szdzm: wordpress-persistent-storage
        wordpress/wordpress-mysql-6cd996b74-lvs8s: mysql-persistent-storage

    Existing Resource Policy: <none>
    ItemOperationTimeout: 1h0m0s

    Preserve Service NodePorts: auto

    Resource List:
      apps/v1/Deployment:
        - wordpress/wordpress(created)
        - wordpress/wordpress-mysql(created)
      apps/v1/ReplicaSet:
        - wordpress/wordpress-5cb57cd8bc(created)
        - wordpress/wordpress-mysql-6cd996b74(created)
      discovery.k8s.io/v1/EndpointSlice:
        - wordpress/wordpress-75z9x(created)
        - wordpress/wordpress-mysql-dhqlq(created)
      v1/ConfigMap:
        - wordpress/kube-root-ca.crt(failed)
      v1/Endpoints:
        - wordpress/wordpress(created)
        - wordpress/wordpress-mysql(created)
      v1/Namespace:
        - wordpress(created)
      v1/PersistentVolume:
        - pvc-c07309d6-4ff1-4a5f-941d-c2ee0d27c82e(skipped)
        - pvc-d31f6710-8895-4406-bd62-b35faa43e163(skipped)
      v1/PersistentVolumeClaim:
        - wordpress/mysql-pv-claim(created)
        - wordpress/wp-pv-claim(created)
      v1/Pod:
        - wordpress/wordpress-5cb57cd8bc-szdzm(created)
        - wordpress/wordpress-mysql-6cd996b74-lvs8s(created)
      v1/Secret:
        - wordpress/default-token-n8sqv(skipped)
      v1/Service:
        - wordpress/wordpress(created)
        - wordpress/wordpress-mysql(created)
      v1/ServiceAccount:
        - wordpress/default(skipped)

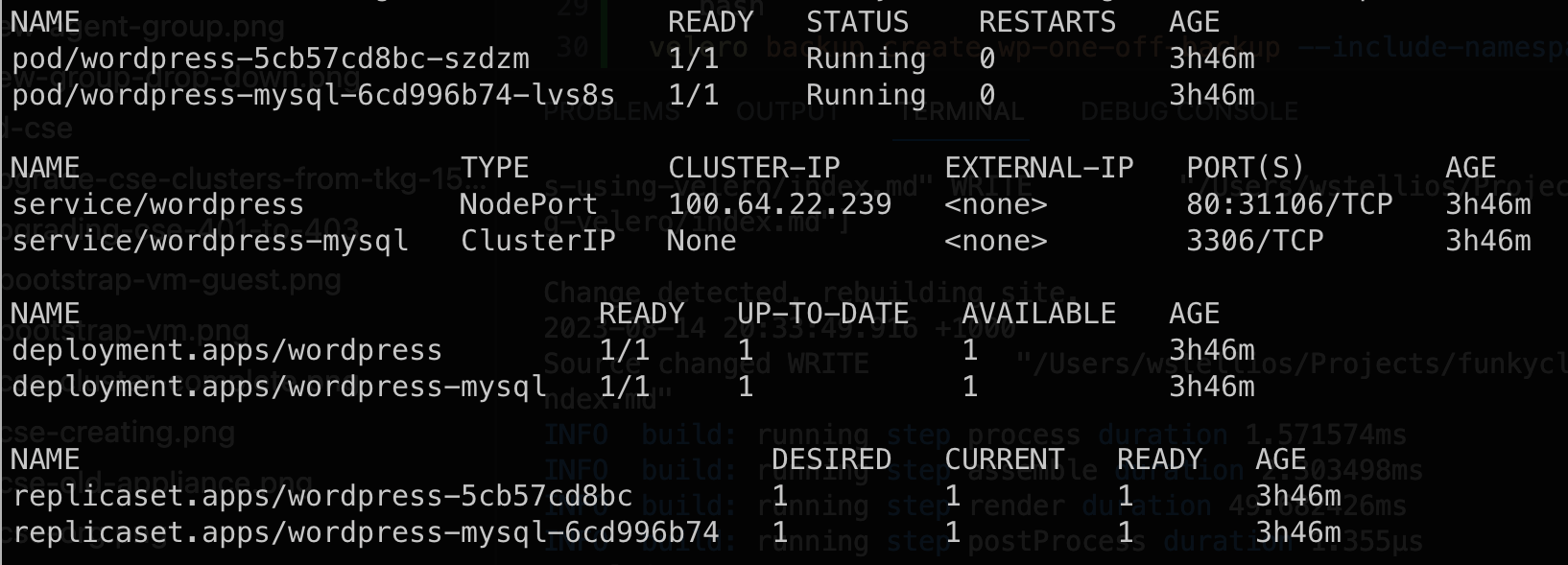

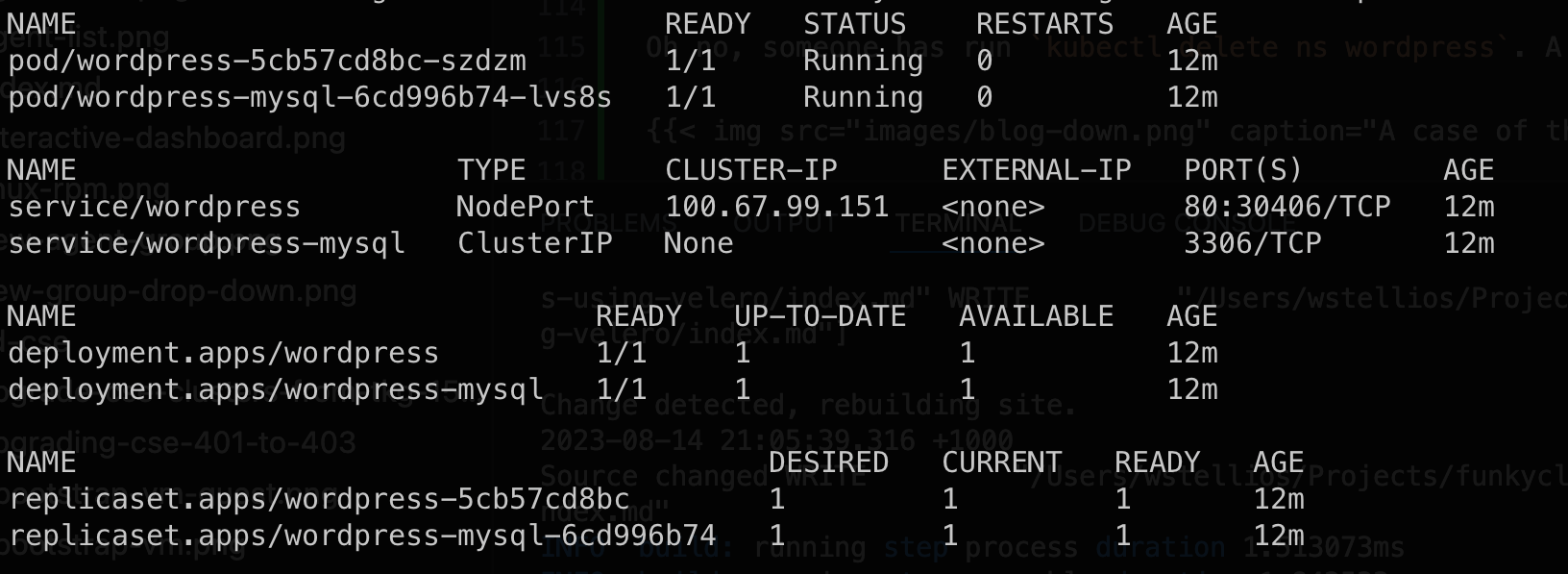

With the --details flag, we can see a great amount of detail for the restore job. What I want to draw your attention to is the Resource List section at the end. Here you can see every Kubernetes object being restored.

Depending on the amount of data in the backup set, the restore could take a minute or an entire day.

Remember, we are restoring from a File-System Backup job, which means the PVCs and Deployments will all be recreated first, with each pod getting an init container (a Restic container) that unpacks the backed-up data into the PVC before the application container launches. Time will ultimately depend on the dataset, the compute power of the node, and the network bandwidth available to pull the data from whichever S3 endpoint you’re using.

As a side note, I hit an issue with the application Service restoring with a randomly generated NodePort. After the restore, I couldn’t reach WordPress. A simple kubectl edit service wordpress -n wordpress and modifying the NodePort back to the original port made it accessible.

Sure, screenshots with no timestamps might not be believable, but how else am I supposed to show it?
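For the NodePort issue mentioned above, the restore output shows a "Preserve Service NodePorts: auto" setting, and the Velero CLI has a matching --preserve-nodeports flag on velero restore create. The wrapper below is a hedged sketch rather than something tested in this post: the function name is made up, and it is worth confirming the flag with velero restore create --help on your version before relying on it.

```shell
# Hypothetical wrapper: restore from a backup and ask Velero to keep the
# NodePorts recorded in the backed-up Services.
# Usage: restore_keep_nodeports <restore-name> <backup-name>
restore_keep_nodeports() {
  restore_name="$1"
  backup_name="$2"
  velero restore create "$restore_name" \
    --from-backup "$backup_name" \
    --preserve-nodeports
}

# Example: restore_keep_nodeports wp-restore-keep-ports wp-one-off-backup
```

If the original port is already taken by another Service in the cluster, the restore of that Service can be expected to fail, so editing the port by hand remains a reasonable fallback.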

Into a different namespace

Let’s say you want to restore the application into a different namespace but the same cluster because your original namespace was awful (maybe it was prod-test-2-final). Whatever your reason is, Velero makes it very easy.

As you can imagine, the restore is another job creation but with a different flag:

    velero restore create wp-restore-from-pebcak-new-ns --from-backup wp-one-off-backup --namespace-mappings wordpress:funkycloudmedina-wordpress

The new flag --namespace-mappings requires a simple map of the old namespace to the new namespace: old-ns:new-ns. If the backup set that you’re restoring contains data from multiple namespaces and you want to remap multiple namespaces for the restore, you can string multiple mappings together like so: old-ns-1:new-ns-1,old-ns-2:new-ns-2.

You’ll also notice the name of the restore job wp-restore-from-pebcak-new-ns is different. Restore jobs need unique names, just like backup jobs.
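When several namespaces need remapping, the comma-separated value is easy to mistype. Here is a small sketch that builds it from individual old-ns:new-ns pairs; the function name is invented for illustration and the only check it does is that each pair contains a colon.

```shell
# Hypothetical helper: join "old:new" pairs into the comma-separated value
# that --namespace-mappings expects.
join_namespace_mappings() {
  result=""
  for pair in "$@"; do
    case "$pair" in
      *:*) ;;  # looks like old-ns:new-ns
      *) echo "invalid mapping: $pair" >&2; return 1 ;;
    esac
    # Add a comma only when there is already something in the result.
    result="${result:+$result,}$pair"
  done
  printf '%s\n' "$result"
}

join_namespace_mappings old-ns-1:new-ns-1 old-ns-2:new-ns-2
# prints: old-ns-1:new-ns-1,old-ns-2:new-ns-2
```

The output can then be passed straight to the restore, for example --namespace-mappings "$(join_namespace_mappings wordpress:funkycloudmedina-wordpress)".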

Track the progress of your restore job with this:

    velero restore describe wp-restore-from-pebcak-new-ns --details

Take a look at the restore job details and you’ll see the namespace remapping.

    Name: wp-restore-from-pebcak-new-ns
    Namespace: velero
    Labels: <none>
    Annotations: <none>

    Phase: Completed
    Total items to be restored: 20
    Items restored: 20

    Started: 2023-08-14 21:36:00 +1000 AEST
    Completed: 2023-08-14 21:37:07 +1000 AEST

    Warnings:
      Velero: <none>
      Cluster: <none>
      Namespaces:
        funkycloudmedina-wordpress: could not restore, ConfigMap "kube-root-ca.crt" already exists. Warning: the in-cluster version is different than the backed-up version.

    Backup: wp-one-off-backup

    Namespaces:
      Included: all namespaces found in the backup
      Excluded: <none>

    Resources:
      Included: *
      Excluded: nodes, events, events.events.k8s.io, backups.velero.io, restores.velero.io, resticrepositories.velero.io, csinodes.storage.k8s.io, volumeattachments.storage.k8s.io, backuprepositories.velero.io
      Cluster-scoped: auto

    Namespace mappings: wordpress=funkycloudmedina-wordpress

    Label selector: <none>

    Restore PVs: auto

    restic Restores:
      Completed:
        funkycloudmedina-wordpress/wordpress-5cb57cd8bc-szdzm: wordpress-persistent-storage
        funkycloudmedina-wordpress/wordpress-mysql-6cd996b74-lvs8s: mysql-persistent-storage

    Existing Resource Policy: <none>
    ItemOperationTimeout: 1h0m0s

    Preserve Service NodePorts: auto

    Resource List:
      apps/v1/Deployment:
        - funkycloudmedina-wordpress/wordpress(created)
        - funkycloudmedina-wordpress/wordpress-mysql(created)
      apps/v1/ReplicaSet:
        - funkycloudmedina-wordpress/wordpress-5cb57cd8bc(created)
        - funkycloudmedina-wordpress/wordpress-mysql-6cd996b74(created)
      discovery.k8s.io/v1/EndpointSlice:
        - funkycloudmedina-wordpress/wordpress-75z9x(created)
        - funkycloudmedina-wordpress/wordpress-mysql-dhqlq(created)
      v1/ConfigMap:
        - funkycloudmedina-wordpress/kube-root-ca.crt(failed)
      v1/Endpoints:
        - funkycloudmedina-wordpress/wordpress(created)
        - funkycloudmedina-wordpress/wordpress-mysql(created)
      v1/Namespace:
        - funkycloudmedina-wordpress(created)
      v1/PersistentVolume:
        - pvc-c07309d6-4ff1-4a5f-941d-c2ee0d27c82e(skipped)
        - pvc-d31f6710-8895-4406-bd62-b35faa43e163(skipped)
      v1/PersistentVolumeClaim:
        - funkycloudmedina-wordpress/mysql-pv-claim(created)
        - funkycloudmedina-wordpress/wp-pv-claim(created)
      v1/Pod:
        - funkycloudmedina-wordpress/wordpress-5cb57cd8bc-szdzm(created)
        - funkycloudmedina-wordpress/wordpress-mysql-6cd996b74-lvs8s(created)
      v1/Secret:
        - funkycloudmedina-wordpress/default-token-n8sqv(created)
      v1/Service:
        - funkycloudmedina-wordpress/wordpress(created)
        - funkycloudmedina-wordpress/wordpress-mysql(created)
      v1/ServiceAccount:
        - funkycloudmedina-wordpress/default(skipped)

There’s a line a little above the Resource List that shows the namespace mapping for the restore: Namespace mappings: wordpress=funkycloudmedina-wordpress.

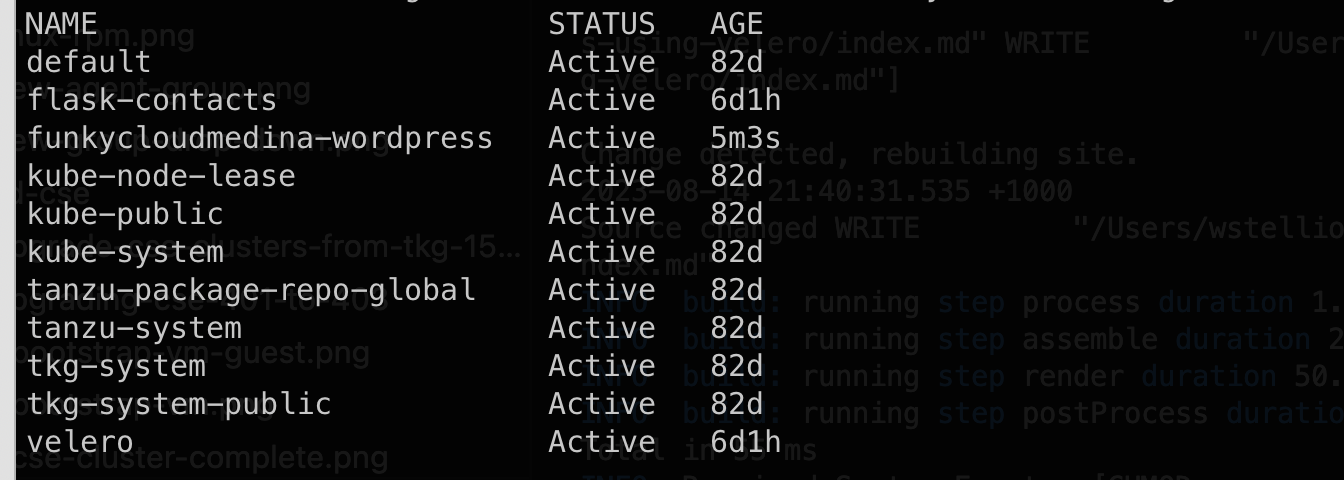

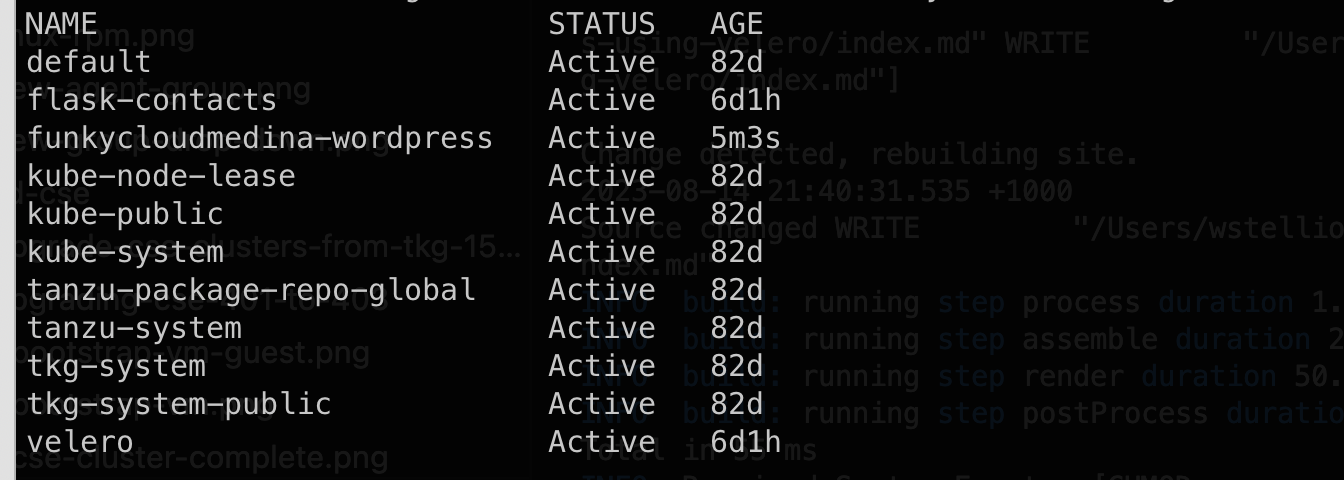

We can view our namespaces and see the new one requested by the restore job:

Create a scheduled backup job

One-off backups are great if you want to move into a new namespace or migrate to a new cluster, but for a better data protection mechanism, you’ll need something scheduled.

Velero allows the creation of Schedules that will create new backup jobs based on… well, a schedule. Let’s create one now to make sure a backup of our WordPress deployment is run every hour.

    velero schedule create wp-hourly-backup --include-namespaces funkycloudmedina-wordpress --default-volumes-to-fs-backup --schedule="@every 60m"

You’ll get this response:

    Schedule "wp-hourly-backup" created successfully.

The key difference between creating a scheduled backup job and a one-off is the schedule command and the --schedule flag.

Before I get into the details of the --schedule flag, let’s describe the scheduled backup object:

    velero schedule describe wp-hourly-backup

    Name: wp-hourly-backup
    Namespace: velero
    Labels: <none>
    Annotations: <none>

    Phase: Enabled

    Paused: false

    Schedule: @every 60m

    Backup Template:
      Namespaces:
        Included: funkycloudmedina-wordpress
        Excluded: <none>

      Resources:
        Included: *
        Excluded: <none>
        Cluster-scoped: auto

      Label selector: <none>

      Storage Location:

      Velero-Native Snapshot PVs: auto

      TTL: 0s

      CSISnapshotTimeout: 0s
      ItemOperationTimeout: 0s

      Hooks: <none>

    Last Backup: <never>

We can see at the end of the output that a backup hasn’t run yet. Once 60 minutes pass from the time of the schedule creation, a backup job will be created and executed. That’s basically all a schedule is: it dynamically creates backup jobs each time the schedule fires.

After waiting overnight, I can run velero backup get and see the new backup jobs created by the schedule:

    NAME                              STATUS      ERRORS   WARNINGS   CREATED                          EXPIRES   STORAGE LOCATION   SELECTOR
    wp-hourly-backup-20230814210203   Completed   0        0          2023-08-15 07:02:03 +1000 AEST   29d       default            <none>
    wp-hourly-backup-20230814200203   Completed   0        0          2023-08-15 06:02:03 +1000 AEST   29d       default            <none>
    wp-hourly-backup-20230814190203   Completed   0        0          2023-08-15 05:02:03 +1000 AEST   29d       default            <none>
    wp-hourly-backup-20230814180203   Completed   0        0          2023-08-15 04:02:03 +1000 AEST   29d       default            <none>
    wp-hourly-backup-20230814170203   Completed   0        0          2023-08-15 03:02:03 +1000 AEST   29d       default            <none>
    wp-hourly-backup-20230814160203   Completed   0        0          2023-08-15 02:02:03 +1000 AEST   29d       default            <none>
    wp-hourly-backup-20230814150203   Completed   0        0          2023-08-15 01:02:03 +1000 AEST   29d       default            <none>
    wp-hourly-backup-20230814140202   Completed   0        0          2023-08-15 00:02:02 +1000 AEST   29d       default            <none>
    wp-one-off-backup                 Completed   0        0          2023-08-14 20:41:37 +1000 AEST   29d       default            <none>

You’ll see that the name of the schedule is used for the backup jobs that the schedule created, with the UTC timestamp appended.

Schedule flag times

Velero schedules are based on cron expressions. The Velero documentation (https://velero.io/docs/v1.11/backup-reference/#schedule-a-backup) gives an introduction to the basics of cron expressions. You’ll notice that my example above used an alias of sorts: there are some “Non-Standard scheduling definitions” that you can use instead. For me, they’re a little easier to read.
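To make the difference between the styles concrete, here is a hedged sketch that wraps the schedule command used earlier and shows three ways of asking for a roughly hourly backup. The function name is made up, and the timing notes in the comments reflect my understanding of the cron library's behaviour rather than anything measured in this post.

```shell
# Hypothetical wrapper: create a File-System Backup schedule for one namespace.
# Usage: create_fsb_schedule <schedule-name> <namespace> <expression>
create_fsb_schedule() {
  schedule_name="$1"
  namespace="$2"
  expression="$3"
  velero schedule create "$schedule_name" \
    --include-namespaces "$namespace" \
    --default-volumes-to-fs-backup \
    --schedule="$expression"
}

# Pick one of these (all need the velero CLI and cluster access):
# create_fsb_schedule wp-hourly-backup funkycloudmedina-wordpress "@every 60m"  # 60 minutes after creation, then every 60 minutes
# create_fsb_schedule wp-hourly-backup funkycloudmedina-wordpress "@hourly"     # at the start of every hour
# create_fsb_schedule wp-hourly-backup funkycloudmedina-wordpress "0 * * * *"   # five-field cron form of @hourly
```

The interval form drifts with the creation time (the backups above all land at two minutes past the hour), while the cron forms line up with the clock.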

Restore from a scheduled backup

Because our schedule created standard jobs anyway, we can use the same restore process as above.

    velero restore create wp-restore-from-scheduled-backup --from-backup wp-hourly-backup-20230814210203

You’ll see it’s identical to our restore from a one-off backup, only the --from-backup value is the specific backup job run by the schedule.
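If you don’t want to look up the newest backup name first, the restore command also accepts --from-schedule, which should pick the most recent backup created by the named schedule. The sketch below assumes that flag and the velero.io/schedule-name label that Velero puts on scheduled backups; the two function names are invented for illustration.

```shell
# Hypothetical helper: list only the backups created by one schedule,
# using the label Velero sets on them.
list_schedule_backups() {
  velero backup get --selector "velero.io/schedule-name=$1"
}

# Hypothetical helper: restore from the latest backup of a schedule.
# Usage: restore_latest_from_schedule <restore-name> <schedule-name>
restore_latest_from_schedule() {
  velero restore create "$1" --from-schedule "$2"
}

# Example:
# list_schedule_backups wp-hourly-backup
# restore_latest_from_schedule wp-restore-latest wp-hourly-backup
```

Using --from-backup with an explicit name, as in the command above, remains the safer choice when you need a specific point in time.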

Modify a schedule

Let’s say you need to modify a backup schedule. Well, it’s not as clean as creating one. We don’t use the Velero CLI to do it; we need to use kubectl to patch the schedule object through the Kubernetes API.

    kubectl patch schedule <schedule-name> -n velero -p '{"spec":{"schedule":"@every 1m"}}' --type=merge

Let’s break this up:

- kubectl: well, that’s obvious.
- patch: the kubectl instruction to patch, or in other words modify, something.
- schedule: the type of object we want to modify.
- <schedule-name>: the name of the Velero schedule you want to modify.
- -n velero: the schedule definition is stored in the velero namespace, so we define the namespace that owns the object we’re modifying.
- -p: is for “patch” and will contain the actual data we want to push into the object.
- '{"spec":{"schedule":"@every 1m"}}': this is the meat and potatoes of the whole command. We’re feeding a JSON block of the spec > schedule property with a new value of “@every 1m”.
- --type=merge: we’re instructing Kubernetes to effectively merge the new spec JSON we’ve submitted with the existing data for the object, meaning only the “schedule” property will change and nothing else.
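The pieces above can be wrapped into a small function so the JSON doesn’t have to be typed by hand each time. This is a sketch with assumptions: the function name is made up, Velero is installed in the velero namespace, and the fully qualified resource name schedules.velero.io is used only to avoid clashing with any other "schedule" resource type in the cluster.

```shell
# Hypothetical helper: change the cron expression of an existing Velero schedule.
# Usage: set_schedule_expression <schedule-name> <expression>
set_schedule_expression() {
  schedule_name="$1"
  expression="$2"
  # Build the merge patch. The expression is inserted as-is, so it must not
  # contain double quotes or backslashes.
  patch="$(printf '{"spec":{"schedule":"%s"}}' "$expression")"
  kubectl patch schedules.velero.io "$schedule_name" -n velero --type=merge -p "$patch"
}

# Example: set_schedule_expression wp-hourly-backup "@every 1m"
```

Running velero schedule describe afterwards is a quick way to confirm the Schedule line shows the new value.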

Troubleshooting backup jobs

There could be a million reasons why your backups or restores aren’t working. The best thing to check is the logs for the backup job (or use velero restore logs for a restore job) by running:

    velero backup logs <backup-job-name>

And you’ll get a massive dump of log data to inspect:

    time="2023-08-14T10:42:14Z" level=info msg="Backing up item" backup=velero/wp-one-off-backup logSource="pkg/backup/item_backupper.go:173" name=wordpress-75z9x namespace=wordpress resource=endpointslices.discovery.k8s.io
    time="2023-08-14T10:42:14Z" level=info msg="Backed up 19 items out of an estimated total of 20 (estimate will change throughout the backup)" backup=velero/wp-one-off-backup logSource="pkg/backup/backup.go:405" name=wordpress-75z9x namespace=wordpress progress= resource=endpointslices.discovery.k8s.io
    time="2023-08-14T10:42:14Z" level=info msg="Processing item" backup=velero/wp-one-off-backup logSource="pkg/backup/backup.go:365" name=wordpress-mysql-dhqlq namespace=wordpress progress= resource=endpointslices.discovery.k8s.io
    time="2023-08-14T10:42:14Z" level=info msg="Backing up item" backup=velero/wp-one-off-backup logSource="pkg/backup/item_backupper.go:173" name=wordpress-mysql-dhqlq namespace=wordpress resource=endpointslices.discovery.k8s.io
    time="2023-08-14T10:42:14Z" level=info msg="Backed up 20 items out of an estimated total of 20 (estimate will change throughout the backup)" backup=velero/wp-one-off-backup logSource="pkg/backup/backup.go:405" name=wordpress-mysql-dhqlq namespace=wordpress progress= resource=endpointslices.discovery.k8s.io
    time="2023-08-14T10:42:14Z" level=info msg="Skipping resource customresourcedefinitions.apiextensions.k8s.io, because it's cluster-scoped and only specific namespaces or namespace scope types are included in the backup." backup=velero/wp-one-off-backup logSource="pkg/util/collections/includes_excludes.go:155"
    time="2023-08-14T10:42:14Z" level=info msg="Backed up a total of 20 items" backup=velero/wp-one-off-backup logSource="pkg/backup/backup.go:436" progress=
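Most of that dump is level=info noise. A quick way to narrow it down is to keep only the warning and error lines; the filter below is a minimal sketch with a made-up function name, and it assumes the level=... field format shown in the log sample above.

```shell
# Hypothetical helper: read Velero log lines on stdin and keep only
# warnings and errors. "|| true" stops an empty result from counting
# as a failure.
problem_lines() {
  grep -E 'level=(warning|error)' || true
}

# Example (needs the velero CLI and cluster access):
# velero backup logs wp-one-off-backup | problem_lines
```

An empty result here doesn’t prove the backup is healthy, but it does tell you the job itself didn’t log any complaints.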

The Velero documentation has a much better breakdown of the troubleshooting steps you can take.