I recently posted how to upgrade VMware Cloud Director’s Container Service Extension from 4.0.1 to 4.0.3. The next step in the journey is to configure the new CAPVCD provider (1.0.1) on any existing Kubernetes clusters deployed by CSE, and then upgrade those clusters from TKG 1.5.4 images to TKG 1.6.1 images.

Wait, what am I updating?

Kubernetes clusters deployed by CSE include what’s called a Cluster API Provider (CAP) for VMware Cloud Director (VCD), which is where the name CAPVCD comes from. A Cluster API Provider allows Kubernetes to use native Kubernetes constructs to define, create and manage Kubernetes clusters (itself included). This is what allows a Kubernetes cluster in VCD to monitor itself and rebuild nodes if one fails. The Provider can talk to VCD to request new nodes and so on.

Why do I need to update it?

CAPVCD interacts with the VCD API, or more specifically with the Container Service Extension API that’s a part of VCD. CSE versions 4.0.0, 4.0.1 and 4.0.2 supported CAPVCD 1.0.0. CSE 4.0.3 now requires CAPVCD 1.0.1. Any clusters deployed by CSE using any of the older versions will be running CAPVCD 1.0.0 and will require the provider to be updated to 1.0.1.

Without the latest version of the provider, a cluster won’t be able to interface with the CSE 4.0.3 API extensions in VCD for cluster operations.
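To see which CAPVCD version a cluster is currently running, one option is to read the image off the controller deployment. The following is a minimal sketch, not something taken from the CSE documentation: it assumes a kubeconfig for the cluster is already on disk (downloading it is covered in the steps further down), and the helper names `image_tag` and `capvcd_version` are made up for illustration. The deployment, namespace and container names are the ones used by the patch command later in this post.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the tag portion of an image reference,
# e.g. "registry/path/name:1.0.0" -> "1.0.0".
# Assumes the reference ends in ":<tag>" (no digest, no untagged image).
image_tag() {
  printf '%s\n' "${1##*:}"
}

# Print the CAPVCD version of the cluster behind the given kubeconfig
# by looking up the image of the container named "manager".
capvcd_version() {
  local kubeconfig="$1" image
  image="$(kubectl --kubeconfig="$kubeconfig" get deployment capvcd-controller-manager \
    -n capvcd-system \
    -o jsonpath='{.spec.template.spec.containers[?(@.name=="manager")].image}')" || return 1
  image_tag "$image"
}

# Usage: capvcd_version kubeconfig-sa-demo
```

A cluster deployed by one of the older CSE versions should report 1.0.0 here before the update.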

Prepare your Kubernetes cluster(s) by installing CAPVCD 1.0.1

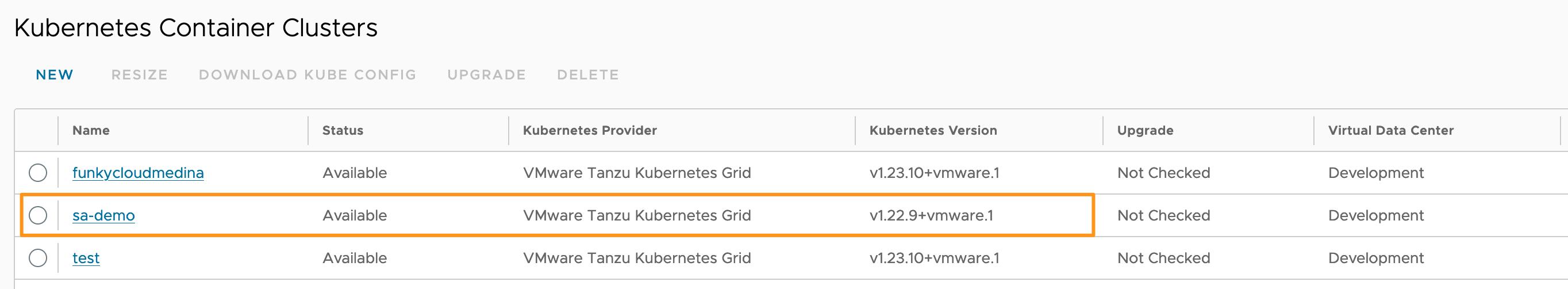

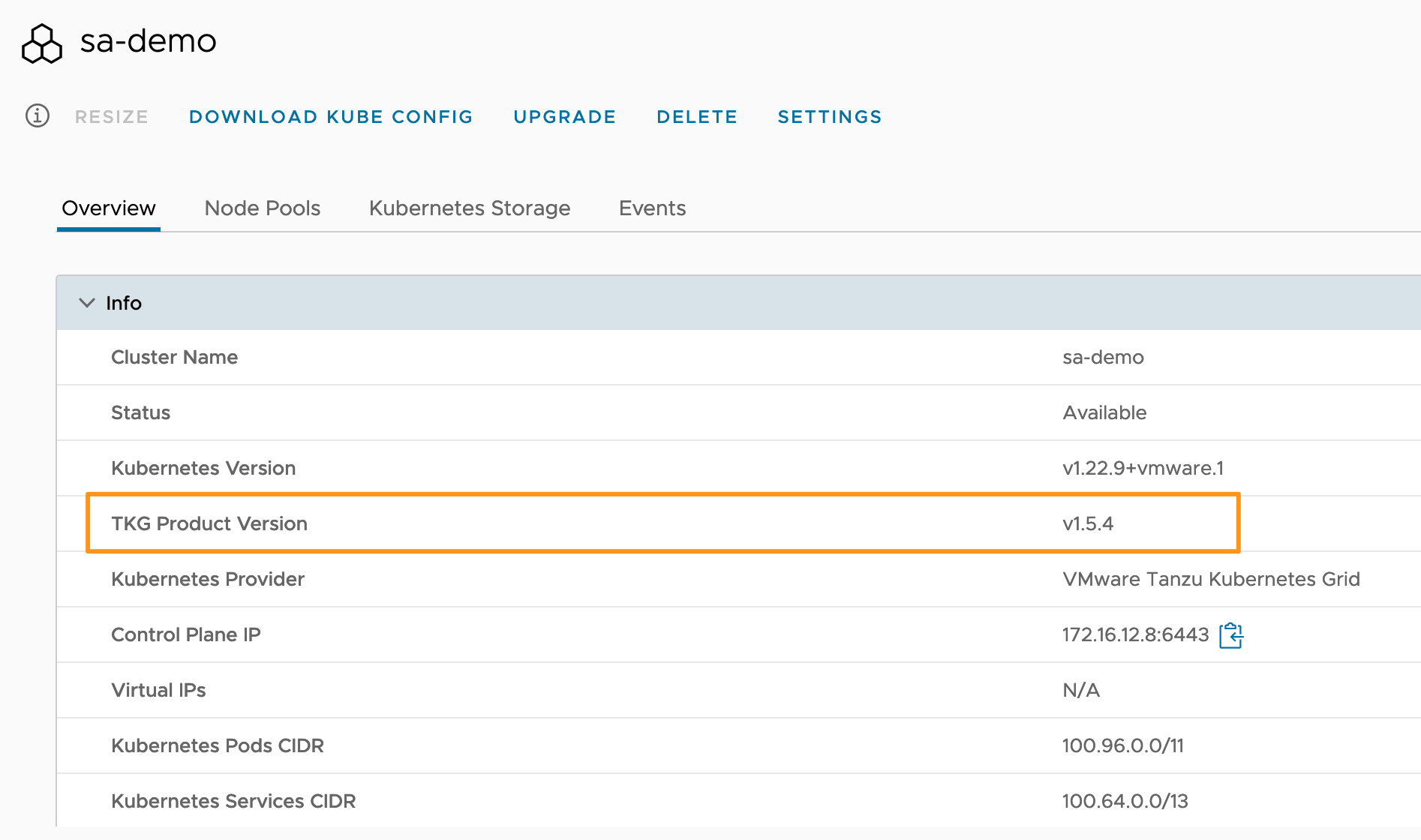

The CSE 4.0.3 release notes include the command/snippet to upgrade CAPVCD on your clusters. For this step, I’m going to use my sa-demo cluster running TKG 1.5.4 originally deployed by CSE 4.0.1:

Install CAPVCD 1.0.1 on TKG 1.5.4 cluster

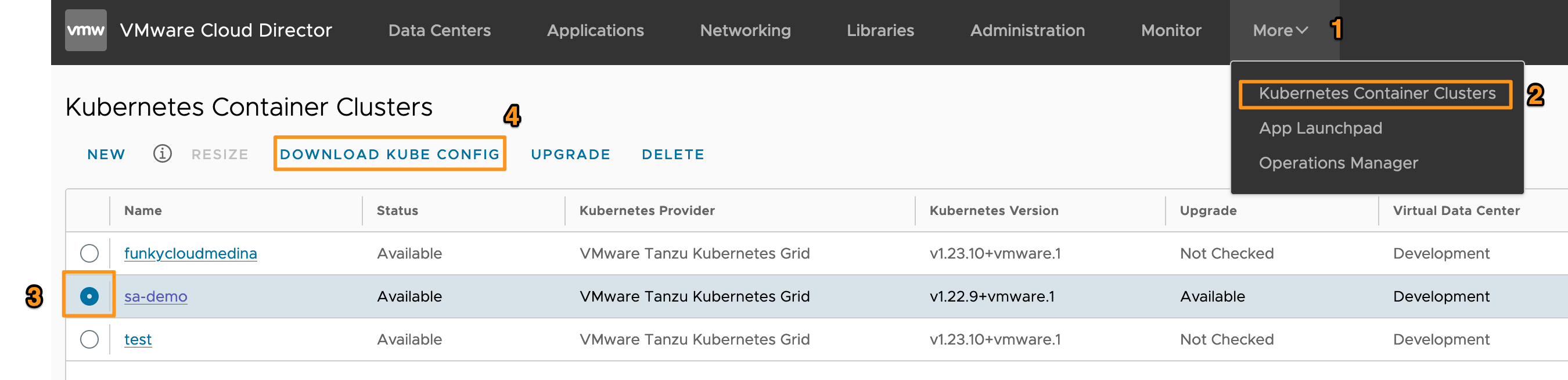

- Log in to the Cloud Director tenancy with a user that has the appropriate Kubernetes Cluster rights assigned.

- Navigate to More > Kubernetes Container Clusters and select the radio button for your cluster. Click Download Kube Config.

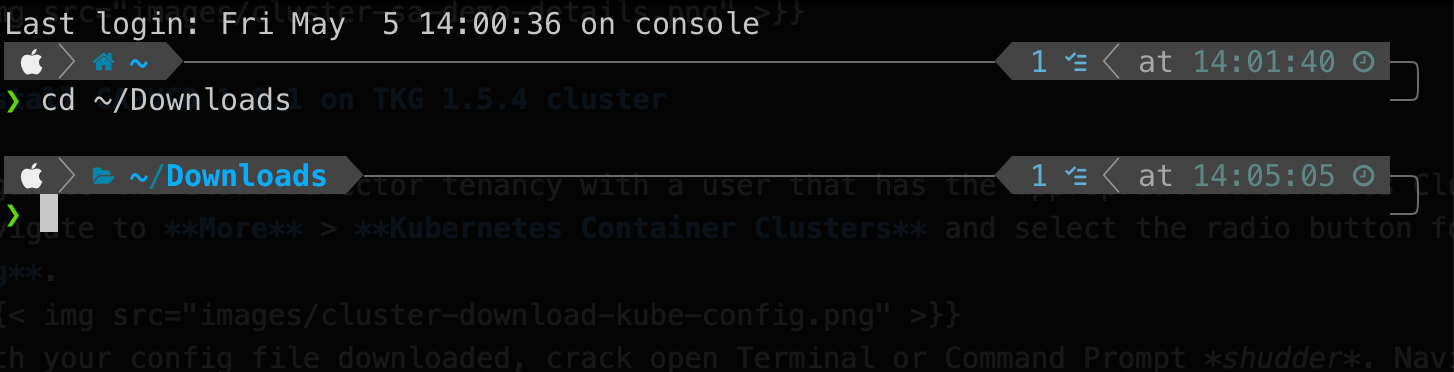

- With your config file downloaded, crack open Terminal or Command Prompt (shudder). Navigate to the location of the downloaded file.

- Now, the next command we need to run isThe

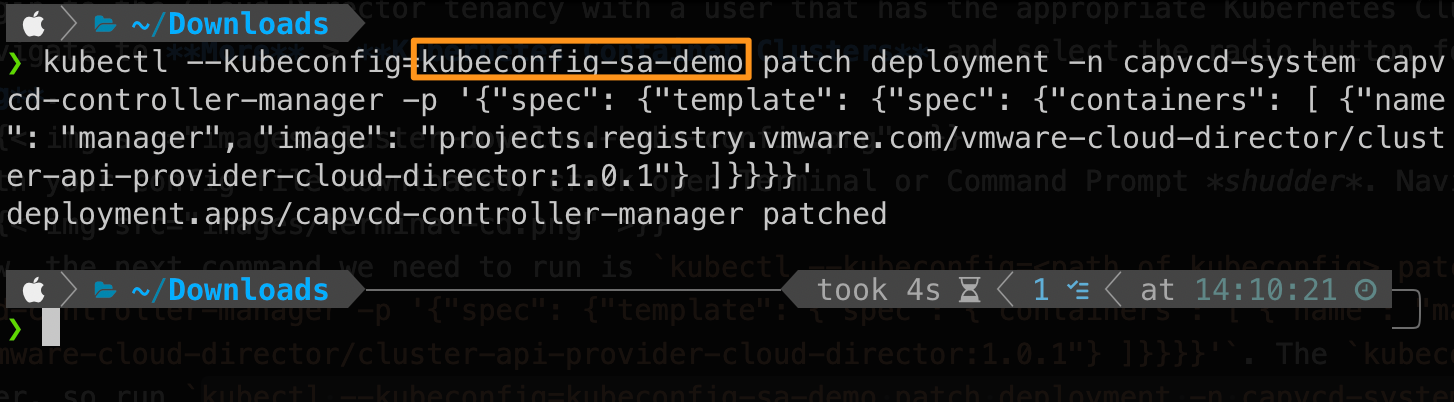

1kubectl --kubeconfig=<path of kubeconfig> patch deployment -n capvcd-system capvcd-controller-manager -p '{"spec": {"template": {"spec": {"containers": [ {"name": "manager", "image": "projects.registry.vmware.com/vmware-cloud-director/cluster-api-provider-cloud-director:1.0.1"} ]}}}}'kubeconfigpath needs to be changed for our cluster, so run1kubectl --kubeconfig=kubeconfig-sa-demo patch deployment -n capvcd-system capvcd-controller-manager -p '{"spec": {"template": {"spec": {"containers": [ {"name": "manager", "image": "projects.registry.vmware.com/vmware-cloud-director/cluster-api-provider-cloud-director:1.0.1"} ]}}}}'

- If the command submits successfully you won’t get a response in the terminal. To check the status of the new CAPVCD deployment, run:

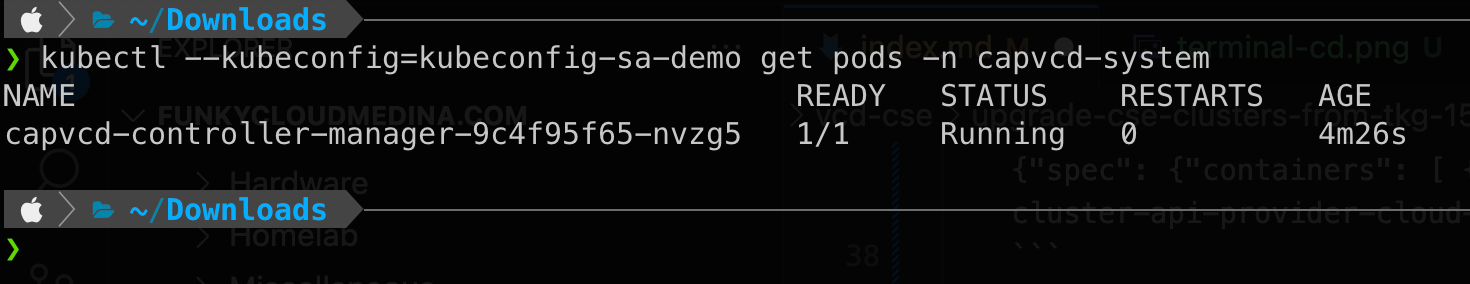

kubectl --kubeconfig=kubeconfig-sa-demo get pods -n capvcd-system

- You’ll see the status of the pods that provide the Cluster API functionality for VCD:

- Running kubectl --kubeconfig=kubeconfig-sa-demo get deployments capvcd-controller-manager -n capvcd-system will return the state of the CAPVCD deployment:

- I think it’s safe to say the update has been completed and we can move on to upgrading the cluster!
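The patch and the checks above can be strung together in a small script, which is handy if you have more than one cluster to update. This is a sketch under assumptions rather than anything from the release notes: the function names and the 300-second timeout are arbitrary choices, and `kubectl rollout status` is used here in place of eyeballing the pod list.

```shell
#!/usr/bin/env bash
CAPVCD_IMAGE_REPO="projects.registry.vmware.com/vmware-cloud-director/cluster-api-provider-cloud-director"

# Build the patch that points the "manager" container at the given tag.
capvcd_patch() {
  printf '{"spec":{"template":{"spec":{"containers":[{"name":"manager","image":"%s:%s"}]}}}}\n' \
    "$CAPVCD_IMAGE_REPO" "$1"
}

# Patch the deployment, then block until the rollout finishes
# or the (arbitrary) timeout expires.
upgrade_capvcd() {
  local kubeconfig="$1" version="$2"
  kubectl --kubeconfig="$kubeconfig" patch deployment capvcd-controller-manager \
    -n capvcd-system -p "$(capvcd_patch "$version")" || return 1
  kubectl --kubeconfig="$kubeconfig" rollout status deployment/capvcd-controller-manager \
    -n capvcd-system --timeout=300s
}

# Usage: upgrade_capvcd kubeconfig-sa-demo 1.0.1
```

Keeping the patch in its own function makes it easy to print and inspect the JSON before anything is sent to a cluster.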

Upgrading CSE clusters from TKG 1.5.4 to TKG 1.6.1

With our cluster now running CAPVCD 1.0.1, we can move on to the TKG upgrade available to us through the CSE UI Plugin.

- Log in to the Cloud Director tenancy with a user that has the appropriate Kubernetes Cluster rights assigned.

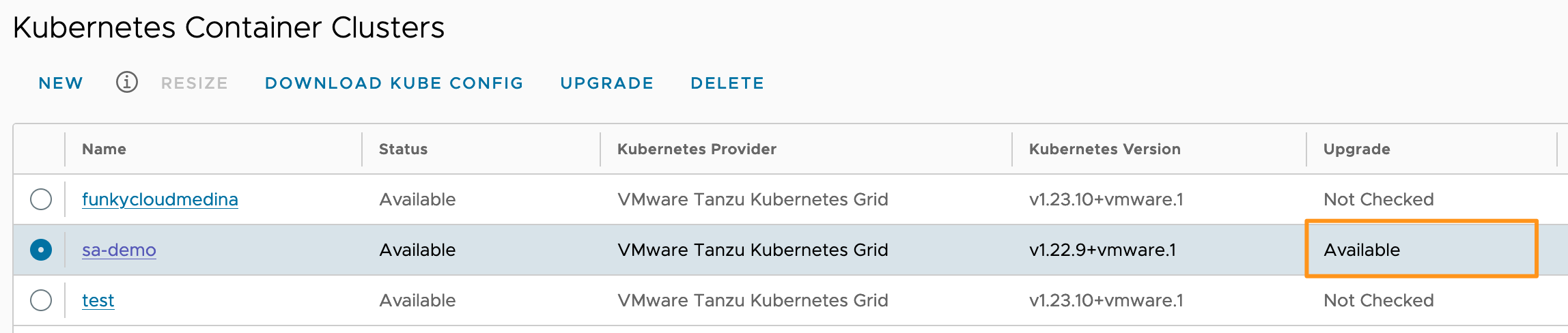

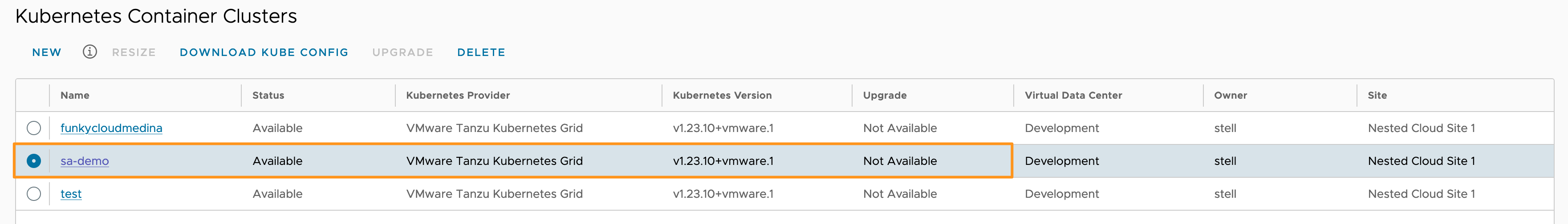

- Navigate to More > Kubernetes Container Clusters and select the radio button for your cluster. Notice the Upgrade column updates to show that there is an upgrade available.

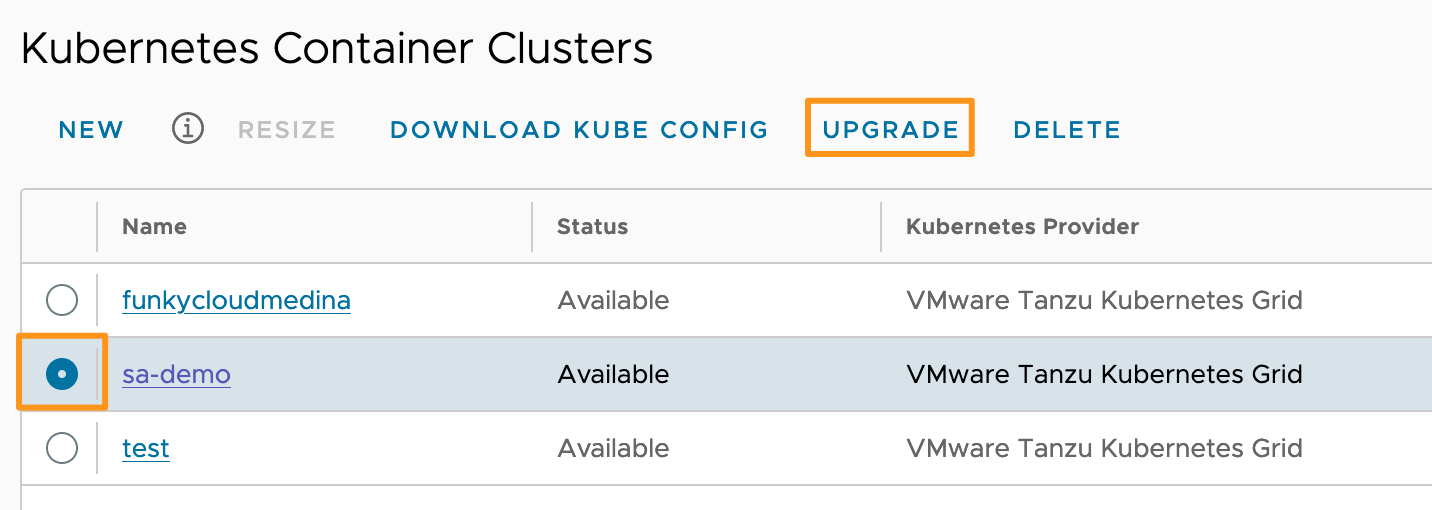

- With the cluster radio button selected, click the UPGRADE button.

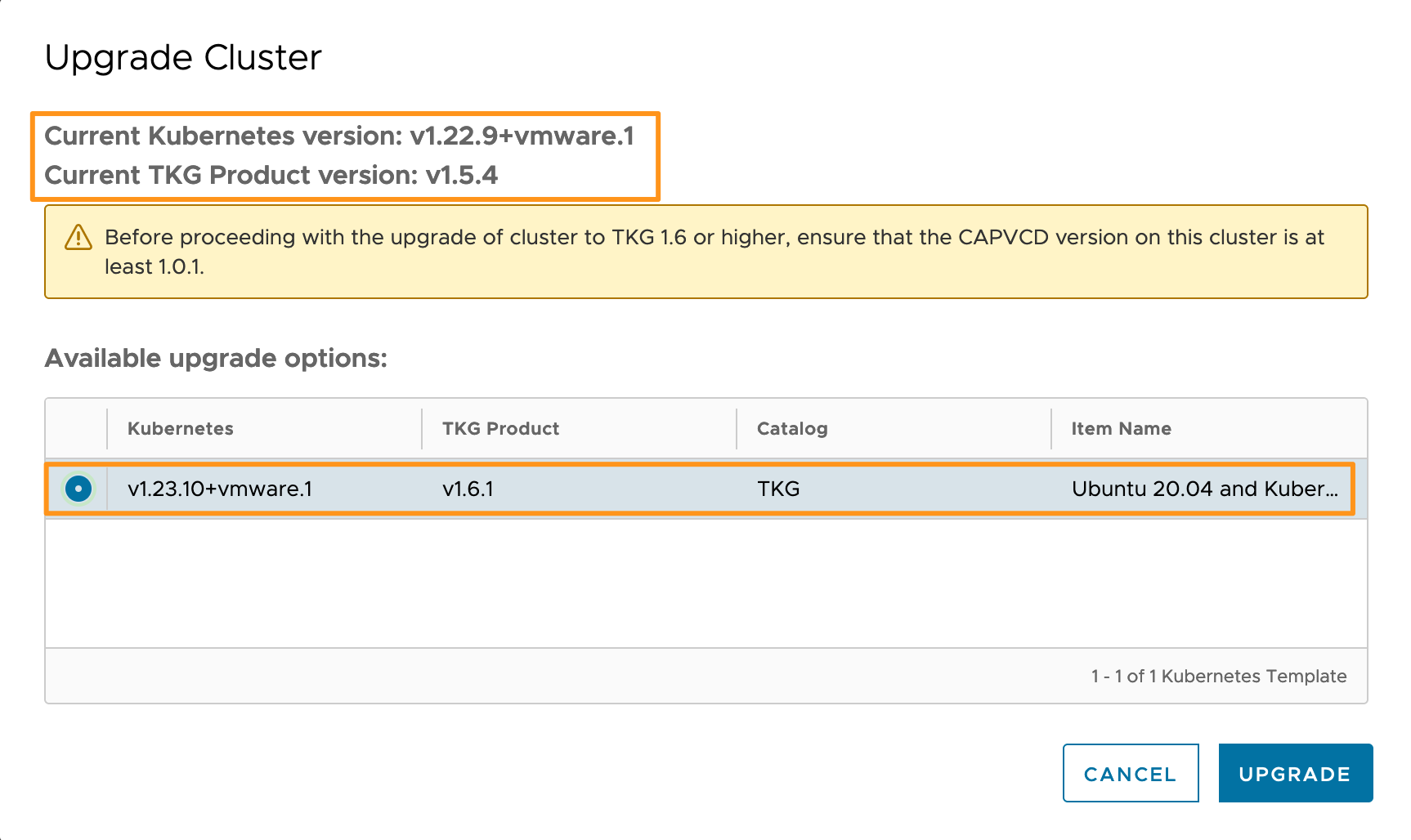

- The upgrade prompt will detail the source and destination versions for both the TKG image and the Tanzu Kubernetes release (TKr) of your cluster. My lab only displays a single destination version as I do not have multiple TKrs uploaded in Cloud Director for TKG 1.5.4 or TKG 1.6.1.

- Select your target version for the cluster upgrade, and be thankful that you followed the steps previously to upgrade CAPVCD.

- Click UPGRADE.

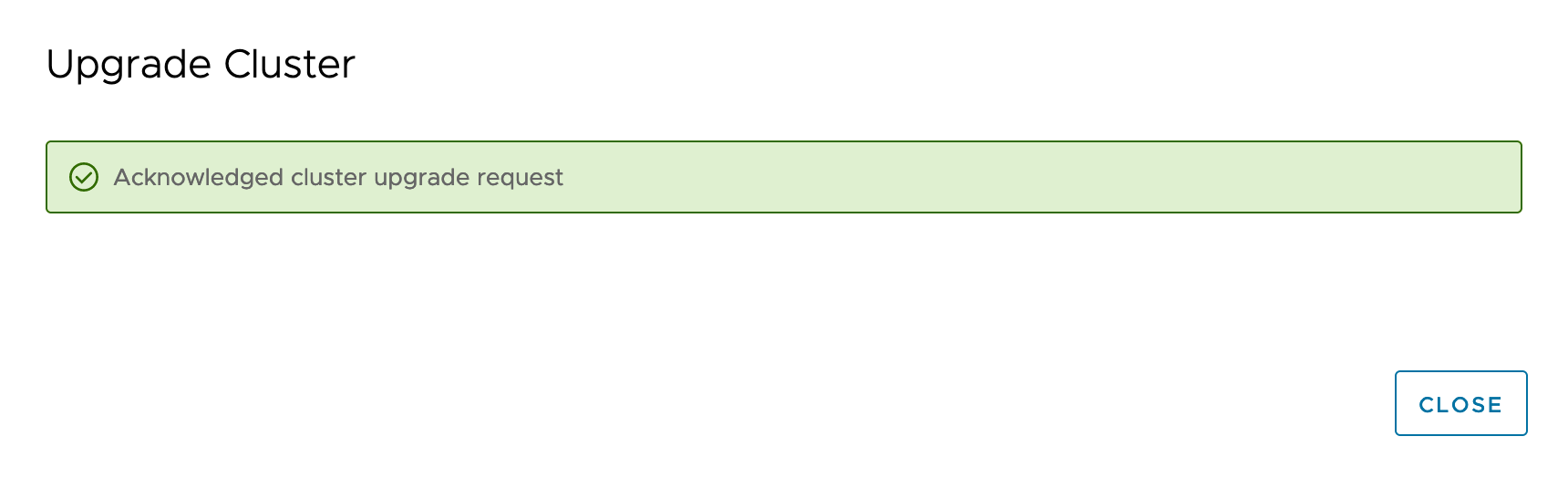

- The CSE UI Plugin will acknowledge the request. Click CLOSE.

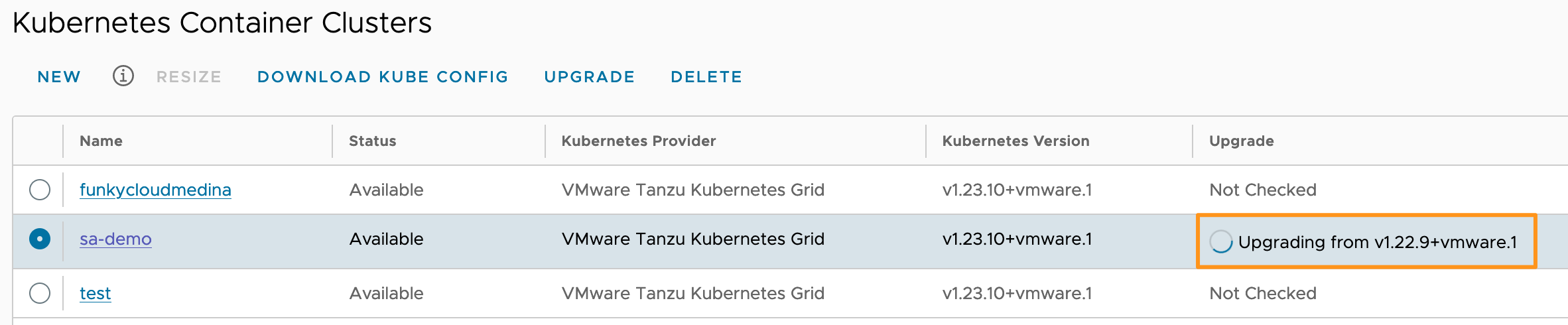

- The UI will show the upgrade of your cluster is in progress:

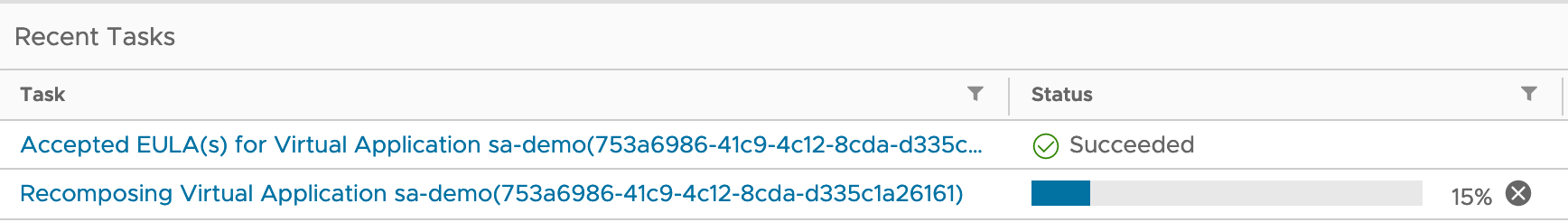

- You’ll also see some VM activities in the VCD Recent Events list:

New nodes will now be added to the cluster. Running pods on the old TKG nodes will be restarted on the new nodes and the old TKG nodes will be removed from VCD.
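The node swap can also be followed from the command line. As a rough sketch (the helper names `nodes_by_version` and `upgrade_progress` are hypothetical, and it assumes the kubeconfig downloaded earlier still reaches the cluster while the upgrade runs), the snippet below tallies nodes per kubelet version so you can watch the old count fall as the new one rises.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read one kubelet version per line on stdin and
# print "<version> <node count>" pairs, sorted by version string.
nodes_by_version() {
  awk 'NF { count[$1]++ } END { for (v in count) print v, count[v] }' | sort
}

# Ask the cluster for each node's kubelet version and tally them.
upgrade_progress() {
  kubectl --kubeconfig="$1" get nodes \
    -o jsonpath='{range .items[*]}{.status.nodeInfo.kubeletVersion}{"\n"}{end}' \
    | nodes_by_version
}

# Usage: upgrade_progress kubeconfig-sa-demo
```

Once only a single version is listed, the old nodes are gone from the cluster's point of view.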

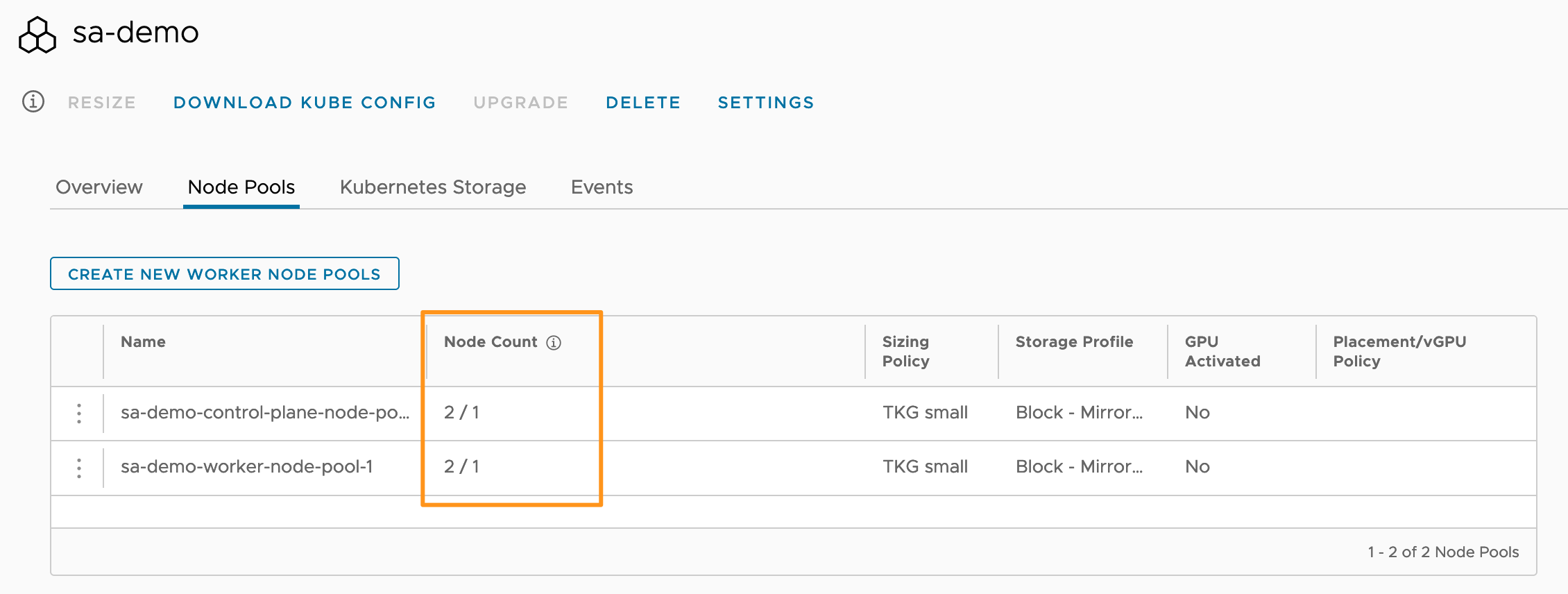

As with the cluster deployment, you can expect this to take some time to finish. In my artificially throttled nested lab, I could see the additional control plane and worker nodes after 30 minutes:

About 10 minutes after the screenshot above, the cluster upgrade was complete and we could see the new TKr version:

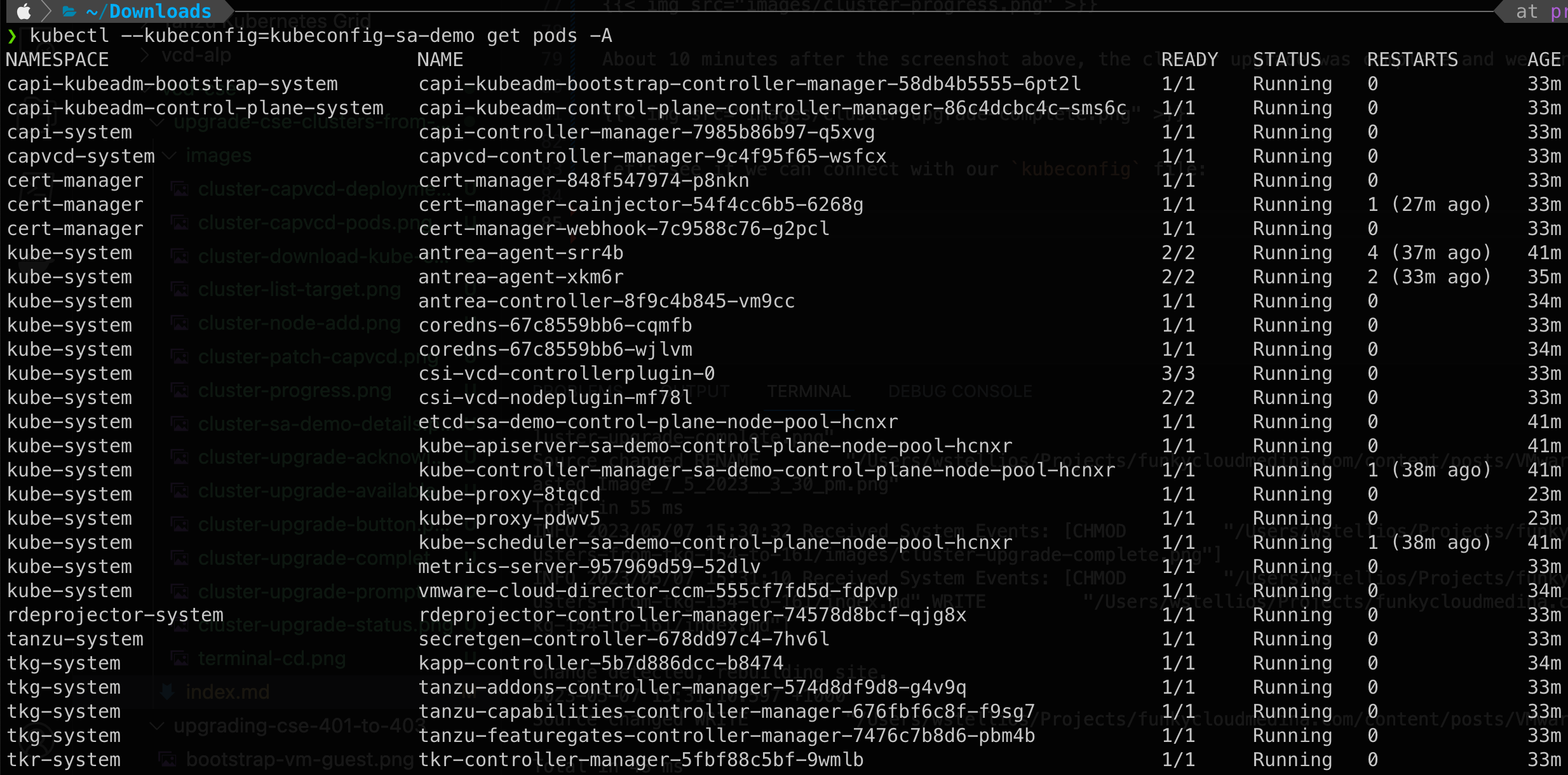

Let’s see if we can connect with our kubeconfig file:


Looks like everything is up now! The restarts are most likely due to some service timeouts in a throttled environment, but all in all, a great success.
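For a scripted version of that final check, something like the following could work. It is a sketch rather than the exact commands used above: the helper names are invented, and the parsing assumes the default column order of `kubectl get nodes` and `kubectl get pods -A` (status in column 2 for nodes; namespace, name and restart count in columns 1, 2 and 5 for pods).

```shell
#!/usr/bin/env bash
# Succeeds only if stdin (output of `kubectl get nodes --no-headers`)
# lists at least one node and every node's STATUS is exactly "Ready".
all_nodes_ready() {
  awk '$2 != "Ready" { bad = 1 } END { if (NR == 0) bad = 1; exit bad + 0 }'
}

# Prints "namespace/pod restarts" for every pod with a non-zero restart
# count, reading `kubectl get pods -A --no-headers` output from stdin.
restarted_pods() {
  awk '$5 + 0 > 0 { print $1 "/" $2, $5 }'
}

# Usage:
#   kubectl --kubeconfig=kubeconfig-sa-demo get nodes --no-headers | all_nodes_ready && echo "nodes OK"
#   kubectl --kubeconfig=kubeconfig-sa-demo get pods -A --no-headers | restarted_pods
```

A node that is still cordoned shows up as something other than plain "Ready", so the first check will fail until the upgrade has fully settled.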